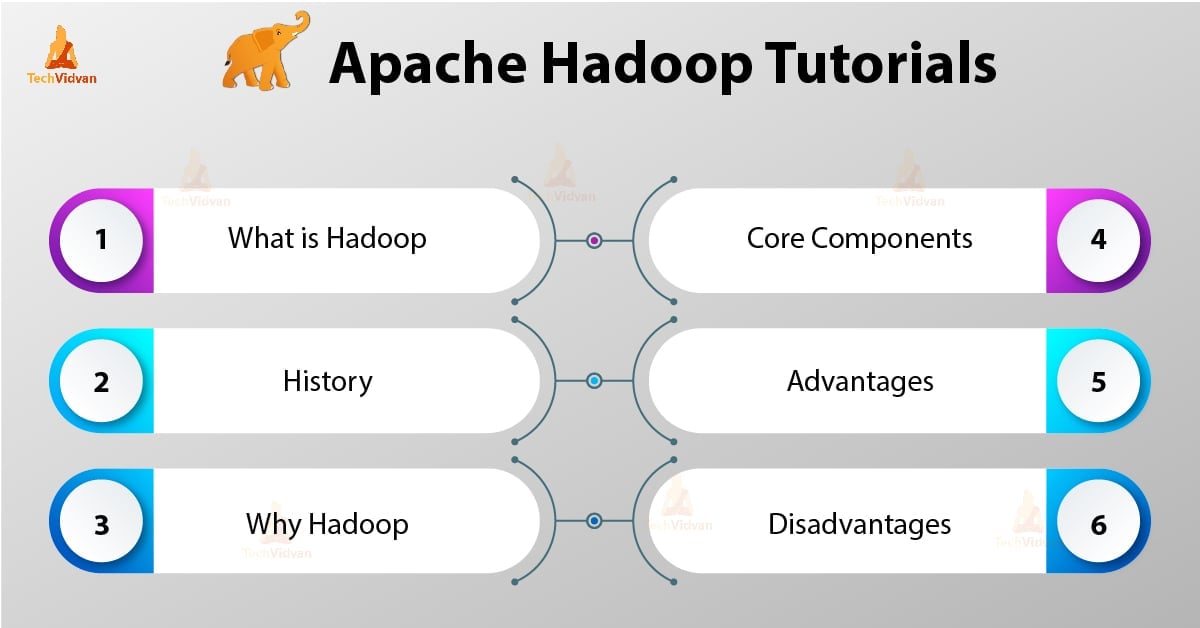

This Hadoop tutorial aims to describe every major aspect of the Apache Hadoop framework. It is designed so that you can learn Hadoop from the basics.

In this article, we answer questions such as: what is Big Data Hadoop, why is Hadoop needed, what is its history, and what are the advantages and disadvantages of the Apache Hadoop framework?

After reading this article, you should have a clear understanding of what the Hadoop framework is.

What is Hadoop?

Hadoop is an open source software framework for the distributed storage and processing of very large data sets. Open source means that it is freely available and that you can change its source code to suit your requirements.

It also makes it possible to run applications on a system with thousands of nodes. Its distributed file system provides rapid data transfer rates among nodes and allows the system to continue operating if a node fails.

Hadoop provides:

- The Storage layer – HDFS

- Batch processing engine – MapReduce

- Resource Management Layer – YARN

Hadoop – History

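In 2002, Doug Cutting and Mike Cafarella started the Nutch project, an open source web search engine built to handle web-scale search and to index millions of web pages. In October 2003, Google published its GFS (Google File System) paper, and the ideas in that paper became the origin of Hadoop.

In 2004, Google published its MapReduce paper. By 2005, Nutch was running on its own open source implementations of both ideas: the Nutch Distributed File System (NDFS), modeled on GFS, and MapReduce.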

In 2006, computer scientists Doug Cutting and Mike Cafarella created Hadoop by moving the distributed storage and processing code out of Nutch into a separate project. In February 2006, Doug Cutting joined Yahoo, which provided the resources and a dedicated team to turn Hadoop into a system that ran at web scale. In 2007, Yahoo started using Hadoop on a 100-node cluster.

In January 2008, Hadoop became its own top-level project at Apache, confirming its success. By then, many companies besides Yahoo! were using Hadoop, such as the New York Times and Facebook.

In April 2008, Hadoop broke a world record to become the fastest system to sort a terabyte of data. Running on a 910-node cluster, it sorted one terabyte in 209 seconds.

In December 2011, Apache Hadoop released version 1.0. In August 2013, version 2.0.6-alpha became available, and by mid-2017 Apache Hadoop 3.0.0-alpha4 was available. The ASF (Apache Software Foundation) manages and maintains the Hadoop framework and its ecosystem of technologies.

Why Hadoop?

Now that we have covered the introduction, let us look at why Hadoop is needed.

It emerged as a solution to the “Big Data” problems:

a. Storage for Big Data – HDFS solves this problem. It stores big data in a distributed manner, splitting each file into blocks. A block is the smallest unit of data in a file system.

Suppose you have 512 MB of data and have configured HDFS to create 128 MB data blocks. HDFS then divides the data into 4 blocks (512 / 128 = 4) and stores them across different DataNodes. It also replicates each data block on different DataNodes.

Hence, storing big data is not a challenge.
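The block arithmetic above can be sketched in a few lines of Python. This is only an illustration, not HDFS code: the function names, the DataNode names, the replication factor of 3, and the round-robin placement are all assumptions made for the example (real HDFS uses a rack-aware placement policy).

```python
MB = 1024 * 1024


def split_into_blocks(file_size, block_size):
    """Return the size in bytes of each block a file would be split into."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The last block only holds the leftover bytes.
        sizes.append(remainder)
    return sizes


def place_replicas(num_blocks, datanodes, replication=3):
    """Assign each block to `replication` distinct DataNodes.

    Hypothetical round-robin placement, used here only for illustration.
    """
    if replication > len(datanodes):
        raise ValueError("not enough DataNodes for the requested replication")
    return {
        block: [datanodes[(block + r) % len(datanodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


blocks = split_into_blocks(512 * MB, 128 * MB)
placement = place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"])

print(len(blocks))  # 4
for block, nodes in placement.items():
    print(block, nodes)
```

A file that is not an exact multiple of the block size simply gets a smaller final block, which is why `split_into_blocks` returns sizes rather than just a count.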

b. Scalability – Hadoop also solves the scaling problem. It focuses mainly on horizontal scaling rather than vertical scaling: you can add extra DataNodes to the HDFS cluster as and when required, instead of scaling up the resources of your existing DataNodes.

As a result, capacity and performance grow as nodes are added.

c. Storing the variety of data – HDFS solves this problem. HDFS can store all kinds of data (structured, semi-structured, or unstructured). It also follows a write-once, read-many model.

Because of this, you can write any kind of data once and read it multiple times to find insights.

d. Data Processing Speed – This is the major problem of big data. To solve it, Hadoop moves the computation to the data instead of moving the data to the computation. This principle is called data locality.
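As a rough sketch of data locality, the snippet below prefers to run a task on a node that already stores a replica of the block, and only falls back to a remote node when no such node is free. The function `choose_node`, the block names, and the node names are hypothetical; Hadoop's real schedulers also consider rack locality and per-node capacity, which this sketch ignores.

```python
def choose_node(replica_nodes, free_nodes):
    """Pick a node for a task that reads one block.

    Returns (node, is_local). A node that holds a replica is preferred,
    so the block does not have to travel over the network.
    """
    if not free_nodes:
        raise ValueError("no free nodes available")
    for node in replica_nodes:
        if node in free_nodes:
            return node, True
    # No free node holds the block: the data must be read remotely.
    # This toy fallback does not track how busy the chosen node already is.
    return min(free_nodes), False


# Hypothetical cluster state: which nodes hold each block, and which are free.
block_replicas = {"block-0": ["dn1", "dn2"], "block-1": ["dn3", "dn4"]}
free_nodes = {"dn2", "dn5"}

assignments = {
    block: choose_node(nodes, free_nodes)
    for block, nodes in block_replicas.items()
}
print(assignments)
```

Here `block-0` can be processed locally on `dn2`, while `block-1` has no free local node and has to be read over the network.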

Hadoop Core Components

Now we will look at the Apache Hadoop core components in detail. Hadoop has 3 core components:

- HDFS

- MapReduce

- YARN (Yet Another Resource Negotiator)

Let’s discuss these core components one by one.

a. HDFS

The Hadoop Distributed File System (HDFS) is the primary storage system of Hadoop. HDFS stores very large files on a cluster of commodity hardware. It follows the principle of storing a small number of large files rather than a huge number of small files.

HDFS stores data reliably even in the case of hardware failure. It provides high-throughput access to applications by reading data in parallel.

Components of HDFS:

- NameNode – It works as the master in the cluster. The NameNode stores metadata, such as the number of blocks, their replicas, and other details. This metadata is held in memory on the master. The NameNode maintains and manages the slave nodes and assigns tasks to them. It should be deployed on reliable hardware, as it is the centerpiece of HDFS.

- DataNode – It works as a slave in the cluster. The DataNode is responsible for storing the actual data in HDFS. It performs read and write operations as requested by clients. DataNodes can be deployed on commodity hardware.
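The division of labor between the two components can be sketched with a toy in-memory model: the NameNode keeps only metadata (which blocks make up a file and where the replicas live), while the DataNodes hold the actual bytes. All class and function names here (`NameNode`, `DataNode`, `put`, `get`), the tiny 5-byte block size, and the replication factor of 2 are assumptions for illustration; this is not the HDFS API.

```python
class DataNode:
    """Stores the actual block contents."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}  # block id -> bytes

    def write(self, block_id, data):
        self.blocks[block_id] = data

    def read(self, block_id):
        return self.blocks[block_id]


class NameNode:
    """Stores only metadata, kept in memory."""

    def __init__(self):
        self.files = {}      # path -> ordered list of block ids
        self.locations = {}  # block id -> names of DataNodes holding a replica


def put(namenode, datanodes, path, data, block_size, replication=2):
    """Split data into blocks, store replicas, and record the metadata."""
    names = sorted(datanodes)
    block_ids = []
    for index, start in enumerate(range(0, len(data), block_size)):
        block_id = f"{path}#{index}"
        # Hypothetical round-robin choice of target DataNodes.
        targets = [names[(index + r) % len(names)] for r in range(replication)]
        for target in targets:
            datanodes[target].write(block_id, data[start:start + block_size])
        namenode.locations[block_id] = targets
        block_ids.append(block_id)
    namenode.files[path] = block_ids


def get(namenode, datanodes, path, down=()):
    """Read a file block by block, skipping DataNodes listed in `down`."""
    parts = []
    for block_id in namenode.files[path]:
        for name in namenode.locations[block_id]:
            if name not in down:
                parts.append(datanodes[name].read(block_id))
                break
        else:
            raise IOError(f"no live replica for {block_id}")
    return b"".join(parts)


datanodes = {name: DataNode(name) for name in ("dn1", "dn2", "dn3")}
namenode = NameNode()
put(namenode, datanodes, "/logs/a.txt", b"hello hadoop", block_size=5)

print(get(namenode, datanodes, "/logs/a.txt"))                # b'hello hadoop'
print(get(namenode, datanodes, "/logs/a.txt", down={"dn1"}))  # b'hello hadoop'
```

Because every block has a replica on a second DataNode, the file can still be read when `dn1` is treated as failed, which is the reliability property described above.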

b. MapReduce

MapReduce is the data processing layer of Hadoop. It processes large volumes of structured and unstructured data stored in HDFS, and it does so in parallel.

It does this by dividing the job (submitted job) into a set of independent tasks (sub-job). MapReduce works by breaking the processing into phases: Map and Reduce.

- Map – It is the first phase of processing, where we specify the complex logic of the job.

- Reduce – It is the second phase of processing, where we specify lightweight processing such as aggregation or summation.
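The two phases can be illustrated with a word count written in plain Python. This is a single-process sketch of the programming model, not Hadoop's MapReduce API: the function names are made up for the example, and the `shuffle` step stands in for the grouping by key that the framework performs between the Map and Reduce phases.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group all values by key, as the framework does between the phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: aggregate the values of one key, here by summing them."""
    return key, sum(values)


def run_job(lines):
    # Each line could be mapped independently, which is what allows
    # a real cluster to run the map tasks in parallel.
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = run_job(["big data big cluster", "data node"])
print(counts)  # {'big': 2, 'data': 2, 'cluster': 1, 'node': 1}
```

In this sketch the heavier work (tokenizing the text) sits in the map function, while the reduce function only sums a list of numbers, which mirrors the split described in the two bullets above.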

c. YARN

YARN provides resource management and is often described as the operating system of Hadoop. It is responsible for managing and monitoring workloads and for implementing security controls. Apache YARN is also a central platform for delivering data governance tools across clusters.

YARN allows multiple data processing engines, such as real-time streaming and batch processing, to run on the same cluster.

Components of YARN:

- Resource Manager – It is a cluster-level component and runs on the master machine. It manages resources and schedules the applications running on top of YARN. It has two components: the Scheduler and the Application Manager.

- Node Manager – It is a node-level component that runs on each slave machine. It continuously communicates with the Resource Manager to remain up to date.
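The interaction between the two components can be sketched as follows: each Node Manager periodically reports its free resources, and the Resource Manager uses the latest reports to decide where an application's container can run. The class, its method names, the memory figures, and the first-fit policy are hypothetical and chosen only for illustration; YARN's actual schedulers are considerably more elaborate.

```python
class ResourceManager:
    """Toy cluster-level scheduler that tracks free memory per node."""

    def __init__(self):
        self.free_mb = {}  # node name -> free memory (MB) from the last report

    def heartbeat(self, node, free_mb):
        """Called by a Node Manager to report its current free memory."""
        self.free_mb[node] = free_mb

    def allocate(self, app_id, needed_mb):
        """Reserve memory for one container; return the node or None.

        First-fit over node names in sorted order (an assumption made
        for this sketch, not YARN's real policy).
        """
        for node in sorted(self.free_mb):
            if self.free_mb[node] >= needed_mb:
                self.free_mb[node] -= needed_mb
                return node
        return None  # no node currently has enough free memory


rm = ResourceManager()
rm.heartbeat("nm1", 2048)
rm.heartbeat("nm2", 4096)

first = rm.allocate("app-1", 3072)
second = rm.allocate("app-2", 2048)
third = rm.allocate("app-3", 2048)
print(first, second, third)  # nm2 nm1 None
```

The third request is refused because, after the first two allocations, no node reports enough free memory; in a real cluster the application would wait until resources are released.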

Advantages of Hadoop

Let’s now discuss various Hadoop advantages to solve the big data problems.

- Scalability – By adding nodes, we can easily grow the system to handle more data.

- Flexibility – In this framework, you don’t have to preprocess data before storing it. You can store as much data as you want and decide how to use it later.

- Low-cost – Open source framework is free and runs on low-cost commodity hardware.

- Fault tolerance – If nodes go down, then jobs are automatically redirected to other nodes.

- Computing power – Its distributed computing model processes big data fast. The more computing nodes you use, the more processing power you have.

Disadvantages of Hadoop

Some disadvantages of the Apache Hadoop framework are given below:

- Security concerns – Managing a complex application such as Hadoop can be challenging. If whoever manages the platform does not know how to enable its security features, your data could be at huge risk. Hadoop also lacks encryption at the storage and network levels by default, which is a major point of concern.

- Vulnerable by nature – The framework is written almost entirely in Java, one of the most widely used languages. Java has been heavily exploited by cybercriminals and, as a result, has been implicated in numerous security breaches.

- Not fit for small data – Hadoop is designed for large data sets, so it is not suited to small data. In particular, it lacks the ability to efficiently support random reads of small files.

- Potential stability issues – Hadoop is an open source framework, created by many developers who continue to work on the project. Although improvements are made constantly, some releases have stability issues. To avoid these issues, organizations should run the latest stable version.

Conclusion

In conclusion, Hadoop is one of the most popular and powerful Big Data tools. It stores huge amounts of data in a distributed manner and then processes that data in parallel on a cluster of nodes.

It provides a highly reliable storage layer (HDFS), a batch processing engine (MapReduce), and a resource management layer (YARN).

Together, these components deliver Hadoop’s functionality.