Sqoop vs Flume – Battle Between Hadoop ETL tools

Apache Sqoop and Apache Flume are both used to transfer data from different sources into the Hadoop Distributed File System (HDFS). This raises a question: which one should you use, and when?

This Sqoop vs Flume tutorial begins with an introduction to Sqoop and Flume. The article then provides a comparison chart of Apache Sqoop and Apache Flume.

Let us start with an introduction to Apache Flume.

What is Apache Flume?

Apache Flume is a framework for collecting, aggregating, and moving data from sources such as web servers and social media platforms to central repositories such as HDFS, HBase, or Hive. It is mainly designed for streaming logs into the Hadoop environment.

Features of Apache Flume

- Apache Flume offers high throughput and low latency.

- It uses a declarative configuration and is extensible.

- Flume is fault-tolerant, stream-oriented, and linearly scalable.

- It is a highly flexible tool.
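To make the "declarative configuration" point concrete, here is a minimal Python sketch that renders the kind of properties file a Flume agent reads: one source, one channel, and one sink, wired together by name. The property keys (`sources`, `channels`, `sinks`, `type`, `spoolDir`, `hdfs.path`, `channel`) follow the format described in the Flume User Guide. The helper function, the agent name `a1`, the spool directory, and the HDFS path are hypothetical placeholders.

```python
def flume_agent_config(agent: str, spool_dir: str, hdfs_path: str) -> str:
    """Render a single-source, single-sink Flume agent definition.

    Hypothetical helper: the agent name, directory, and HDFS path are
    illustrative assumptions, not values from a real deployment.
    """
    lines = [
        # Declare the named components hosted by this agent.
        f"{agent}.sources = r1",
        f"{agent}.channels = c1",
        f"{agent}.sinks = k1",
        # Source: pick up new files dropped into a spooling directory.
        f"{agent}.sources.r1.type = spooldir",
        f"{agent}.sources.r1.spoolDir = {spool_dir}",
        f"{agent}.sources.r1.channels = c1",  # sources take a list of channels
        # Channel: buffer events in memory between source and sink.
        f"{agent}.channels.c1.type = memory",
        f"{agent}.channels.c1.capacity = 10000",
        # Sink: write events into HDFS.
        f"{agent}.sinks.k1.type = hdfs",
        f"{agent}.sinks.k1.hdfs.path = {hdfs_path}",
        f"{agent}.sinks.k1.channel = c1",  # a sink drains exactly one channel
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(flume_agent_config("a1", "/var/log/app/spool",
                             "hdfs://namenode:8020/flume/logs"))
```

The resulting file would typically be passed to an agent with a command such as `flume-ng agent --conf conf --conf-file example.conf --name a1`. The `--name` value must match the prefix used in the file.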

What is Apache Sqoop?

Apache Sqoop is a framework for transferring data from relational databases to the Hadoop Distributed File System (HDFS), HBase, or Hive.

It is specially designed for moving data between RDBMSs and the Hadoop ecosystem. Sqoop works with various databases such as MySQL, Teradata, HSQLDB, and Oracle.

Features of Apache Sqoop

- Sqoop supports bulk data import; that is, it can import an individual table or an entire database into HDFS, where the data is stored as files.

- It parallelizes the data transfer for optimal system utilization.

- Sqoop supports direct input; that is, it can import tables directly into HBase and Hive.

- It makes data analysis efficient.
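The features above map onto options of the `sqoop import` and `sqoop import-all-tables` tools. The following Python sketch assembles such a command line. The flags it uses (`--connect`, `--username`, `-P`, `--table`, `--num-mappers`, `--target-dir`, `--warehouse-dir`, `--hive-import`, `--hbase-table`, `--column-family`) are standard Sqoop 1.4.x options. The function itself, the JDBC URL, the user, and the table names are hypothetical.

```python
import shlex
from typing import List, Optional


def sqoop_import_args(
    connect: str,
    username: str,
    table: Optional[str] = None,
    *,
    mappers: int = 4,
    target_dir: Optional[str] = None,
    hive_import: bool = False,
    hbase_table: Optional[str] = None,
    column_family: str = "cf",
) -> List[str]:
    """Assemble a Sqoop 1.x command line (hypothetical helper)."""
    if table is None:
        # Bulk mode: import every table in the database.
        args = ["sqoop", "import-all-tables"]
    else:
        args = ["sqoop", "import", "--table", table]
    # -P prompts for the password instead of exposing it on the command line.
    args += ["--connect", connect, "--username", username, "-P"]
    # Number of map tasks that copy slices of the data in parallel.
    args += ["--num-mappers", str(mappers)]
    if hive_import:
        # Load the imported data directly into Hive.
        args.append("--hive-import")
    elif hbase_table is not None:
        if table is None:
            raise ValueError("this sketch supports HBase only for single-table imports")
        # Write rows straight into an HBase table instead of HDFS files.
        args += ["--hbase-table", hbase_table, "--column-family", column_family]
    elif target_dir is not None:
        # Single-table imports use --target-dir; import-all-tables uses a
        # parent --warehouse-dir with one subdirectory per table.
        args += ["--target-dir" if table else "--warehouse-dir", target_dir]
    return args


if __name__ == "__main__":
    cmd = sqoop_import_args("jdbc:mysql://db.example.com/sales", "etl", "orders",
                            target_dir="/data/sales/orders")
    print(shlex.join(cmd))
```

Building the command as a list rather than a single string avoids shell-quoting mistakes. `shlex.join` is used only to display it.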

Let us now explore the differences between Apache Sqoop and Apache Flume.

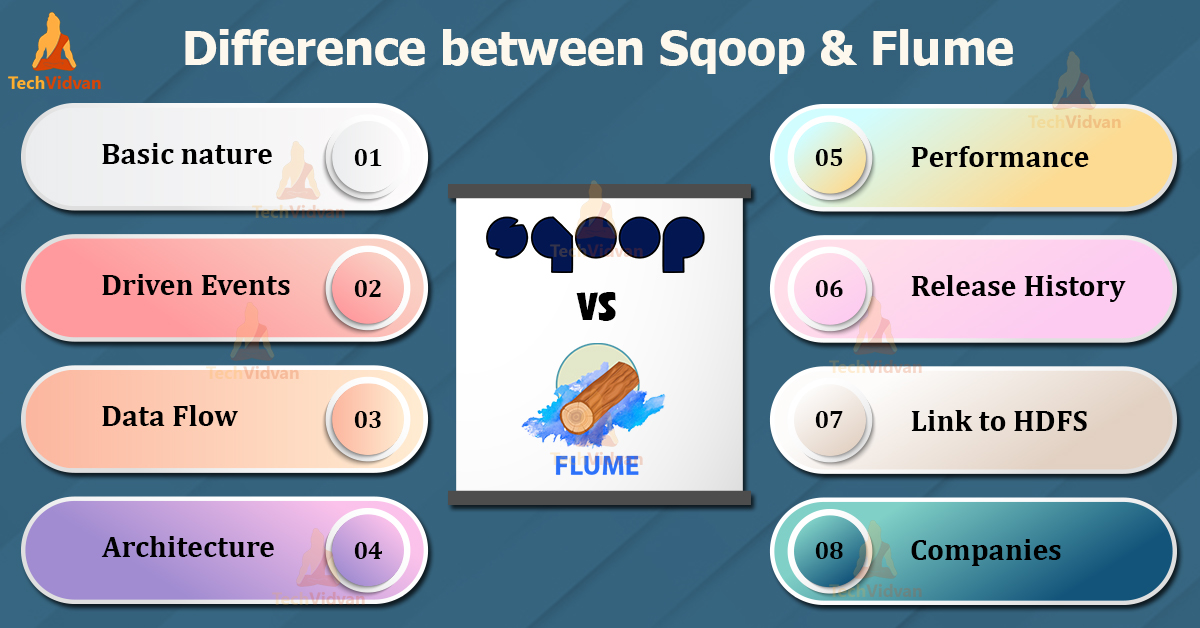

Difference between Apache Sqoop and Apache Flume

1. Basic nature

Sqoop: It is designed to work with any RDBMS that has JDBC connectivity. Sqoop imports data from relational databases such as MySQL and Oracle into the Hadoop ecosystem.

Flume: It is designed for transferring streaming data, such as log files, from different sources to the Hadoop ecosystem.

2. Event-Driven Nature

Sqoop: Apache Sqoop data loads are not driven by events. A transfer runs when a user or a scheduler launches a Sqoop job.

Flume: Apache Flume is completely event-driven.
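To illustrate what "event-driven" means here, the following Python sketch models a Flume-style flow in a single process. The `Event` shape (string headers plus a byte-array body) mirrors the structure of a Flume event. `MiniAgent` and its methods are hypothetical and are not Flume's Java API. The point is that each record moves as soon as it arrives, not when a batch job is launched.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict


@dataclass
class Event:
    # Same shape as a Flume event: string headers plus a byte-array body.
    headers: Dict[str, str] = field(default_factory=dict)
    body: bytes = b""


class MiniAgent:
    """Toy model of an event-driven flow (hypothetical, not Flume's API)."""

    def __init__(self, sink: Callable[[Event], None]) -> None:
        self.channel: Deque[Event] = deque()  # buffer between source and sink
        self.sink = sink

    def on_data(self, line: str, host: str) -> None:
        # Source side: each arriving record becomes an event right away.
        self.channel.append(Event({"host": host}, line.encode("utf-8")))
        self._drain()

    def _drain(self) -> None:
        # Sink side: deliver everything currently buffered, in arrival order.
        while self.channel:
            self.sink(self.channel.popleft())


if __name__ == "__main__":
    agent = MiniAgent(lambda e: print(e.headers, e.body))
    agent.on_data("GET /index.html 200", host="web01")
```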

3. Data Flow

Sqoop: Sqoop is specifically designed for transferring data in parallel from relational databases to Hadoop.

Flume: Flume works with streaming data sources. Because of its distributed nature, it is well suited to collecting and aggregating data from many different sources.
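Sqoop's parallel transfer can be pictured as splitting a numeric column into ranges, one per mapper. By default this is the table's primary key; otherwise it is the column passed with `--split-by`. The Python sketch below is a simplified model of that idea. It assumes an integer key whose minimum and maximum are already known. Sqoop's real splitters also handle other column types and apply their own boundary rules.

```python
from typing import List, Tuple


def split_ranges(lo: int, hi: int, num_mappers: int) -> List[Tuple[int, int]]:
    """Divide the inclusive key range [lo, hi] into contiguous, disjoint ranges.

    Simplified model: each range would be imported by one map task.
    """
    if num_mappers < 1:
        raise ValueError("num_mappers must be at least 1")
    total = hi - lo + 1
    if total <= 0:
        return []
    n = min(num_mappers, total)  # never create empty splits
    size, extra = divmod(total, n)
    ranges = []
    start = lo
    for i in range(n):
        # The first `extra` splits take one additional key each.
        end = start + size - 1 + (1 if i < extra else 0)
        ranges.append((start, end))
        start = end + 1
    return ranges
```

For example, with keys 1 to 10 and four mappers, `split_ranges(1, 10, 4)` yields `(1, 3), (4, 6), (7, 8), (9, 10)`. Each map task would then read only the rows whose key falls in its own range.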

4. Architecture

Sqoop: Apache Sqoop follows a connector-based architecture. Sqoop connectors know how to connect to the different data sources.

Flume: Apache Flume follows an agent-based architecture. A Flume agent is a JVM process that hosts the components (sources, channels, and sinks) responsible for fetching data and delivering it to its destination.
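The connector idea can be illustrated with a small lookup keyed on the JDBC connect string. This is a hypothetical Python sketch: the registry contents and connector names are made up. Real Sqoop selects its connection manager internally, and you can override that choice with `--connection-manager` or `--driver`.

```python
from typing import Dict

# Hypothetical registry: JDBC URL prefix -> connector name.
# Real Sqoop connector classes and selection logic differ.
CONNECTORS: Dict[str, str] = {
    "jdbc:mysql:": "mysql-connector",
    "jdbc:oracle:": "oracle-connector",
    "jdbc:postgresql:": "postgresql-connector",
    "jdbc:hsqldb:": "hsqldb-connector",
}
GENERIC = "generic-jdbc-connector"


def pick_connector(connect_string: str) -> str:
    """Return the connector registered for the URL's prefix, else a generic one."""
    url = connect_string.lower()
    for prefix, connector in CONNECTORS.items():
        if url.startswith(prefix):
            return connector
    # Any other database reachable over JDBC falls back to the generic path.
    return GENERIC


if __name__ == "__main__":
    print(pick_connector("jdbc:mysql://db.example.com/sales"))
```

In this toy registry a Teradata URL falls back to the generic connector only because the registry omits it. Vendor-specific connectors can be registered alongside the built-in ones.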

5. Performance

Sqoop: Apache Sqoop reduces processing load and excess storage on the source system by transferring the data to other systems, which gives it fast performance.

Flume: Apache Flume is highly robust, fault-tolerant, and has a tunable reliability mechanism for failover and recovery.
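Flume's reliability rests on transactional channels. A sink takes events from its channel inside a transaction and commits only after delivery succeeds. If delivery fails, the transaction rolls back and the events stay in the channel. The Python class below is a toy model of that behavior, not Flume's API. `TxChannel`, `deliver_batch`, and the batch size are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, List


class TxChannel:
    """Toy transactional channel (hypothetical, not Flume's API)."""

    def __init__(self) -> None:
        self.queue: Deque[bytes] = deque()

    def put(self, event: bytes) -> None:
        self.queue.append(event)

    def deliver_batch(self, sink: Callable[[List[bytes]], None], batch_size: int) -> bool:
        # Begin: take up to batch_size events from the front of the channel.
        taken = [self.queue.popleft() for _ in range(min(batch_size, len(self.queue)))]
        if not taken:
            return True
        try:
            sink(taken)  # may raise, e.g. if HDFS is unreachable
        except Exception:
            # Rollback: put the events back at the front, in original order.
            self.queue.extendleft(reversed(taken))
            return False
        return True  # commit: the taken events are now gone from the channel
```

Because a failed delivery leaves the events in the channel, a later retry can deliver them once the destination recovers.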

6. Where to use

Sqoop: We use Apache Sqoop when we need to copy data quickly and generate analytical results faster.

Flume: Flume is generally used to pull data from different sources in order to analyze patterns or perform sentiment analysis on server logs and social media data.

7. Release History

Sqoop: The first version of Sqoop was released in March 2012. At the time of writing, its latest stable release was 1.4.7.

Flume: The first stable version of Flume, 1.2.0, was released in June 2012. At the time of writing, its latest stable release was 1.9.0.

8. When to use

Sqoop: It is considered an ideal fit if the data is available in Oracle, Teradata, MySQL, PostgreSQL or any other database with JDBC connectivity.

Flume: It is an ideal fit for moving bulk streaming data from sources such as JMS or spooling directories.

9. Link to HDFS

Sqoop: The Hadoop Distributed File System is the destination when importing data with Sqoop.

Flume: In Flume, data flows to the Hadoop Distributed File System through one or more channels.
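A single Flume source can feed more than one channel. The Python sketch below renders a fan-out configuration in which a `replicating` channel selector (Flume's default) copies every event into each listed channel. Each channel is then drained by its own HDFS sink. The property keys follow the Flume User Guide. The helper, the spool directory, the channel and sink names, and the HDFS paths are hypothetical.

```python
from typing import Dict


def fan_out_config(agent: str, spool_dir: str, hdfs_paths: Dict[str, str]) -> str:
    """Render an agent whose one source replicates events to several channels.

    hdfs_paths maps each (hypothetical) channel name to the HDFS directory
    written by that channel's dedicated sink.
    """
    channels = list(hdfs_paths)
    sinks = [f"k_{c}" for c in channels]
    lines = [
        f"{agent}.sources = r1",
        f"{agent}.channels = {' '.join(channels)}",
        f"{agent}.sinks = {' '.join(sinks)}",
        f"{agent}.sources.r1.type = spooldir",
        f"{agent}.sources.r1.spoolDir = {spool_dir}",
        # The source writes to every listed channel...
        f"{agent}.sources.r1.channels = {' '.join(channels)}",
        # ...and the replicating selector sends each event to all of them.
        f"{agent}.sources.r1.selector.type = replicating",
    ]
    for channel, sink in zip(channels, sinks):
        lines += [
            f"{agent}.channels.{channel}.type = memory",
            f"{agent}.sinks.{sink}.type = hdfs",
            f"{agent}.sinks.{sink}.hdfs.path = {hdfs_paths[channel]}",
            f"{agent}.sinks.{sink}.channel = {channel}",
        ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(fan_out_config("a1", "/var/log/app/spool",
                         {"c1": "hdfs://namenode:8020/logs/raw",
                          "c2": "hdfs://namenode:8020/logs/archive"}))
```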

10. Companies

Sqoop: Companies such as Apollo Group and Coupons.com use Apache Sqoop.

Flume: Companies such as Goibibo, Mozilla, and Capillary Technologies use Apache Flume.

Summary

I hope that this article has answered your question. We use Sqoop when we need to transfer data from an RDBMS with JDBC connectivity to HDFS. On the other hand, we use Apache Flume to transfer streaming data from log servers to HDFS.

Sqoop follows a connector-based architecture, whereas Flume follows an agent-based architecture. Flume is event-driven, whereas Sqoop is not. This article has listed the major differences between Apache Flume and Apache Sqoop.