Top 10 Data Science Tools to Master the Art of Handling Data

Most Used Data Science Tools by Experts

Data Scientist has become a popular job title as demand for Data Science grows in the business world. But with great opportunity comes great responsibility, and the job of a Data Scientist requires many skills and qualities.

A Data Scientist needs to master Machine Learning, statistics and probability, analytics, and programming. They need to perform many tasks, such as preparing the data, analyzing it, building models, drawing meaningful conclusions, predicting results, and optimizing those results.

To perform these tasks efficiently and quickly, Data Scientists use different Data Science tools. These tools help with the different stages of a Data Science life-cycle, ease the tasks involved in a Data Science project, and help produce the best results for the organization.

Many of these tools do not require professional programming skills. Instead, they provide functionality and advanced features that let you carry out Data Science tasks easily.

In this tutorial, we will explore the best Data Science tools that many leading organizations use to implement Data Science.

Top Data Science Tools

The following is a list of the top Data Science tools.

1. Tableau

Tableau is a popular tool for data visualization. It transforms raw data into a format that is easy to understand and use. One advantage of this Data Science tool is that it does not require any programming knowledge. For this reason, people from different backgrounds, such as business, research, and industry, use it.

Along with data visualization, Tableau is also used to gain meaningful insights from raw data. It provides a wide range of tools: some are for developers, and others are for sharing findings, visuals, reports, and dashboards. The developer tools include Tableau Desktop and Tableau Public.

The tools for sharing are Tableau Online, Tableau Server, and Tableau Reader.

The main features of Tableau are combining data from multiple discrete sources into a useful dataset and then performing real-time analysis on that data.

2. Excel

Microsoft Excel is one of the best-known tools in the Data Science industry. Organizations of all sizes use Excel in their Data Science projects. If you are new to Data Science, you can start by learning Excel, as it is the most common tool. It is applied in both financial analysis and business planning.

Excel provides a wide range of functions and other tools for analyzing data and extracting insights from it. However, Excel is better suited to smaller datasets; with extremely large datasets it is not very efficient.

R and Python are well suited to working with Excel data. You can use other Data Science languages as well.
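As a rough illustration of working with Excel data from Python, the sketch below summarizes a worksheet that has been exported from Excel as CSV. The column names, the sample rows, and the `revenue_by_region` helper are all made up for this example; it uses only the Python standard library and is one possible approach, not the only way to read Excel data.

```python
import csv
import io
from collections import defaultdict

# Hypothetical worksheet contents, as they might look after saving a sheet as CSV.
SALES_CSV = """region,product,units,unit_price
North,Widget,10,2.50
South,Widget,4,2.50
North,Gadget,3,10.00
South,Gadget,5,10.00
"""


def revenue_by_region(csv_text):
    """Sum units * unit_price per region, similar to a small pivot table."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["region"]] += int(row["units"]) * float(row["unit_price"])
    return dict(totals)


if __name__ == "__main__":
    for region, revenue in sorted(revenue_by_region(SALES_CSV).items()):
        print(f"{region}: {revenue:.2f}")
```

For a real file, you would pass the text of the exported CSV instead of the inline sample.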

3. RapidMiner

RapidMiner is one of the most widely used tools for Data Science. It is designed to support data mining, text mining, multimedia mining, Machine Learning, business analytics, and predictive analysis. It provides an environment for carrying out the various stages of a Data Science life-cycle, including many predefined functions for data preprocessing, modeling, evaluation, and deployment.

The tool comes with a user-friendly GUI inspired by the block-diagram approach. You arrange the blocks in the correct order, and you can then execute a large number of algorithms without writing any code. The user enters the raw datasets, and the tool analyzes the data automatically.

The tool is somewhat complex to use, but it gives you the resources to run more than 1500 operations. It is written in Java. Versions older than v6 are free to use; the latest versions require a license.

It provides various tools for different purposes which are as follows:

4. DataRobot

DataRobot provides an environment for automated Machine Learning; that is, the tool automatically performs the steps of a Machine Learning life-cycle.

With this tool, extracting insights from the data and identifying trends in extremely large datasets becomes much easier and faster. This helps Data Scientists make better and more accurate predictions.

The tool provides all the resources required to build, train, evaluate, and finally deploy a model. It improves the productivity of the organization by building a large number of models in less time.
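The general idea behind automated Machine Learning (train several candidate models, score each one on held-out data, keep the best) can be sketched in a few lines of plain Python. This is only an illustration of the concept: it does not use DataRobot or its API, and the two toy models and the `auto_select` helper are assumptions made up for this example.

```python
from statistics import mean


def fit_mean(xs, ys):
    """Baseline model: always predict the mean of the training targets."""
    m = mean(ys)
    return lambda x: m


def fit_line(xs, ys):
    """Least-squares straight line y = a * x + b."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    b = my - a * mx
    return lambda x: a * x + b


def mse(model, xs, ys):
    """Mean squared error of a model on the given data."""
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


def auto_select(candidates, train, holdout):
    """Train every candidate, score it on the holdout set, return the best one."""
    results = []
    for name, fit in candidates.items():
        model = fit(*train)
        results.append((mse(model, *holdout), name, model))
    score, name, model = min(results, key=lambda r: r[0])
    return name, model, score


if __name__ == "__main__":
    train = ([0, 1, 2, 3, 4, 5], [1, 3, 5, 7, 9, 11])
    holdout = ([6, 7], [13, 15])
    name, model, score = auto_select({"mean": fit_mean, "line": fit_line}, train, holdout)
    print(name, score, model(10))
```

A real AutoML platform searches over far more model types and settings, but the select-by-holdout-score loop is the same basic pattern.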

This Data Science tool is very easy to use, and anyone with knowledge of Data Science can use it. It supports almost all of the most commonly used Machine Learning algorithms and several popular Data Science tools such as Apache Spark, H2O, R, and TensorFlow.

DataRobot aims to build ready-to-deploy models, that is, models that need no further testing or evaluation. For every model you build, it also automatically generates a document containing all the details of the model.

This document covers the development of the model, its assumptions and limitations, performance results, and the points considered during evaluation.

5. QlikView

QlikView is one of the leading tools for Data Visualization and Business Intelligence; more than 24,000 organizations use it. This Data Science tool was designed for developing highly interactive guided analytics applications that solve business problems efficiently, and it is very easy to use.

QlikView has given the analytics market a major boost. The tool helps in extracting insights and identifying relationships in the data. It helps you gain greater insights in less time, thus increasing productivity.

Moreover, it does not require any professional skills to build an analytic app using the tool.

6. H2O

H2O is an open-source Machine Learning tool provided by the company H2O.ai. The company also provides a set of other Machine Learning tools: Deep Water, Sparkling Water, Steam, and Driverless AI.

H2O is one of the best tools available for Machine Learning and is the first choice of many leading enterprises all over the world. It supports all the most commonly used Machine Learning algorithms as well as the AutoML feature.

Most Data Scientists prefer R and Python for Data Science, so H2O provides interfaces in R and Python, as well as Java, JSON, and Scala. H2O itself is written in Java. It also provides an interface of its own named “Flow”, which enables Data Scientists to gather and import data from multiple discrete sources, build models, and evaluate them easily and quickly.

Using Flow, you can also share your findings and other resources with other Data Scientists, which helps to improve the results.

Many of the market giants like Microsoft, IBM, Anaconda, Databricks, etc. are using H2O for making faster and better predictions by easily extracting insights from the data.

7. TensorFlow

Machine Learning algorithms are normally quite difficult to understand and implement, but TensorFlow helps you perform the entire Machine Learning life-cycle more easily.

TensorFlow is an open-source Data Science tool available for free. It is mainly used for implementing Machine Learning algorithms on a large scale, and it allows developers to build large-scale neural networks with many layers.

TensorFlow is especially used for classification, perception, understanding, discovering, prediction, and creation.

TensorFlow helps you to collect the data, select the best-fit model, train the model, make predictions, and finally optimize the predictions based on the model’s performance. It can also train models for complex tasks like image recognition and digit classification using neural networks.
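To make the train-then-predict workflow concrete, here is a minimal sketch of what a training loop does, written in plain Python rather than with TensorFlow itself. It fits a straight line by gradient descent on mean squared error. The function names, learning rate, and toy data are assumptions chosen for this example; a library like TensorFlow computes the gradients and runs this kind of loop for you, on much larger models.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(params, x):
    """Apply the trained parameters to a new input."""
    w, b = params
    return w * x + b


if __name__ == "__main__":
    # Toy data generated from y = 2x + 1.
    params = train_linear([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
    print(params, predict(params, 10))
```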

TensorFlow is most commonly programmed in Python.

The biggest advantage of using TensorFlow is “abstraction”: the tool lets the user focus on the central logic of the application while background processes and tasks are handled automatically.

8. BigML

BigML is a platform used by a large number of organizations for applying Machine Learning algorithms easily. It comes with a user-friendly web interface. You just have to import the data on which you want to make predictions.

The tool provides the resources needed for all sorts of prediction-based problems and helps you identify and apply the Machine Learning algorithms that will benefit the organization the most.

The tool helps to minimize the cost, complexity, and technical errors in your project.

BigML has a wide range of applications across industries such as aerospace, automotive, entertainment, food, healthcare, transport, and finance. The tool provides Machine Learning services both in the cloud and on-premises.

You can access the tool without having a detailed knowledge of Machine Learning because of the many advanced options provided to the user.

9. Snowflake

Today, data is being generated at an enormous rate. Earlier, Data Scientists faced many problems because they lacked the resources to store such large amounts of data. Now, there are many tools available to solve these issues. One of these tools is Snowflake.

Snowflake provides a platform for cloud data storage in the form of software as a service (SaaS). In other words, it provides Data Warehousing services. It uses SQL for managing the database over the cloud.

To produce efficient results and more accurate predictions, you require a large amount of data: the larger the dataset, the better the results and predictions.

When you use Snowflake, it handles all the responsibilities of managing your data, such as where and how to store it, and hides these details from the user. The only way to access your data is by running SQL queries.
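The access pattern described above (you never touch the storage directly, you only send SQL) can be sketched with Python's built-in `sqlite3` module standing in for a cloud warehouse. This is not Snowflake's own connector or API; the `orders` table, its rows, and the helper names are made up for illustration.

```python
import sqlite3


def demo_connection():
    """Create an in-memory database with a small, made-up orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("acme", 120.0), ("acme", 80.0), ("globex", 50.0), ("initech", 300.0)],
    )
    return conn


def top_customers(conn, min_total):
    """Return (customer, total) rows whose summed orders reach min_total."""
    query = """
        SELECT customer, SUM(amount) AS total
        FROM orders
        GROUP BY customer
        HAVING SUM(amount) >= ?
        ORDER BY total DESC
    """
    return conn.execute(query, (min_total,)).fetchall()


if __name__ == "__main__":
    conn = demo_connection()
    for customer, total in top_customers(conn, 100):
        print(customer, total)
```

The caller only writes the query; where and how the rows are stored stays hidden behind the connection, which is the point the paragraph above makes about a managed warehouse.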

The tool provides several services for storing and processing data at a very affordable price. It works with several popular Data Science tools and platforms like DataRobot, H2O.ai, and AWS, and it supports the programming languages Scala, R, Python, and Java.

The main advantage of using it is scalability, that is, you can scale your resources up and down according to your needs.

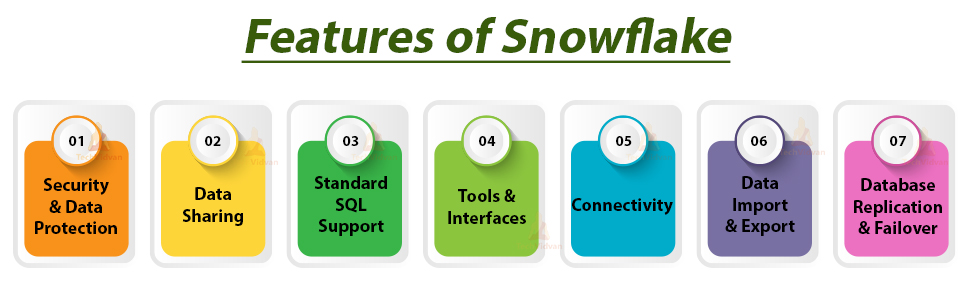

The different features of Snowflake are:

10. Trifacta

One of the biggest challenges for Data Scientists is the management of unstructured data.

Today a large part of the raw data is available as unstructured data, that is, in the form of images, videos, audio, etc., which is very difficult to handle.

Thus, for better results, it is very important to convert this data into a form that is easier to manage, analyze, and draw useful conclusions from.

The process of transforming raw data into a more usable form by applying various methods is known as Data Wrangling.
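As a small, hand-written illustration of Data Wrangling, the sketch below cleans a few messy tabular records: it trims and normalizes names, parses dates written in two different formats, and turns unparseable amounts into missing values. The sample rows, the accepted date formats, and the function names are assumptions made up for this example, and it handles messy tabular data rather than images or video.

```python
from datetime import datetime

# Hypothetical raw records with inconsistent formatting.
RAW_ROWS = [
    {"name": "  alice ", "signup": "2021-03-05", "spend": "120.50"},
    {"name": "BOB", "signup": "05/04/2021", "spend": "n/a"},
    {"name": "", "signup": "2021-06-01", "spend": "10"},
]

# Date formats this sketch accepts: ISO (year-month-day) and day/month/year.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Try each known format; return None if none of them match."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    return None


def parse_amount(text):
    """Convert a string to float, or None when it is not a number."""
    try:
        return float(text)
    except ValueError:
        return None


def wrangle(rows):
    """Return cleaned records, dropping rows that have no usable name."""
    cleaned = []
    for row in rows:
        name = row["name"].strip().title()
        if not name:
            continue
        cleaned.append(
            {
                "name": name,
                "signup": parse_date(row["signup"]),
                "spend": parse_amount(row["spend"]),
            }
        )
    return cleaned


if __name__ == "__main__":
    for record in wrangle(RAW_ROWS):
        print(record)
```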

Trifacta is a tool used for Data Wrangling. It enables Data Scientists to focus completely on the actual analysis by giving them already prepared data.

Thus Data Scientists do not have to struggle with preparing the raw dataset. Trifacta also helps Data Scientists with data analysis and Machine Learning algorithms.

Summary

Data Science uses a combination of skills, and a number of tools are available to simplify the process. Most of these tools do not require much experience, so anyone who knows how to apply Data Science can use them. With some of them, you can run very complex algorithms with just one or two lines of code.

These tools help Data Scientists handle very large datasets and find solutions to complex problems more efficiently.