Thread Scheduler in Java

In this article, we aim to shed light on the critical role thread scheduling plays in shaping our computing experiences. We’ll dissect the inner workings of scheduling algorithms, dissecting their strengths and limitations. By unravelling the threads behind thread scheduling, we hope to inspire a deeper appreciation for the complex choreography that allows our devices to execute tasks seamlessly, bringing us one step closer to unravelling the enigmatic heartbeat of modern computing.

Thread Scheduler

If more than one thread is waiting for a chance to run, the Thread Scheduler will determine which thread should be executed. The exact algorithm followed by the Thread scheduler will not be determined, as the algorithm followed by each Thread scheduler varies from JVM to JVM. Hence, in multithreading, we can’t guarantee the exact output; every time we run the code, we will get different outputs.

Algorithms used by the Thread Scheduler:

Round-Robin Scheduling:

In this algorithm, each thread is given a fixed time slice (quantum) to execute. When the time slice expires, the scheduler interrupts the thread and moves it to the back of the queue. The next thread in line gets a chance to run.

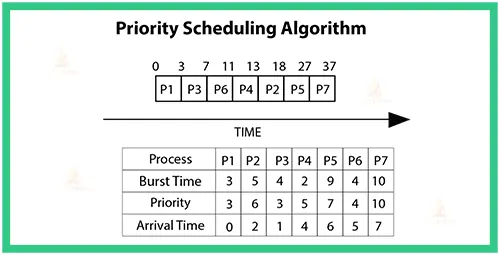

Priority-Based Scheduling:

Threads are assigned priority levels, and the scheduler selects the highest-priority thread to execute. This approach can lead to priority inversion, where lower-priority threads block higher-priority ones, impacting overall system performance. To mitigate this, techniques like priority inheritance and priority ceiling protocols are employed.

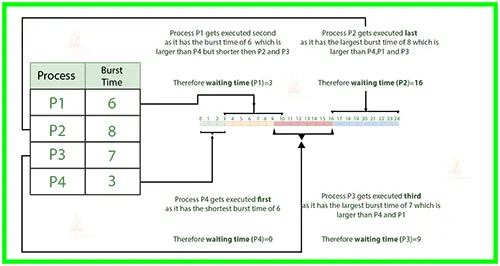

Shortest Job Next (SJN) Scheduling:

Also known as Shortest Job First (SJF) or shortest remaining time scheduling, this algorithm selects the thread with the smallest execution time remaining. SJN aims to minimize average waiting time and turnaround time, but it requires knowledge of thread execution times, which can be challenging to estimate accurately.

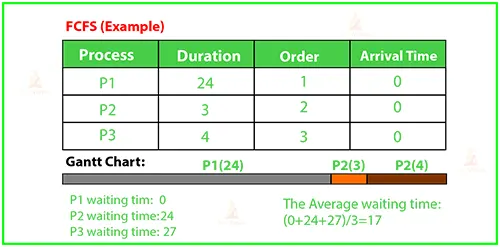

First-Come, First-Served (FCFS) Scheduling:

Threads are executed in the order they arrive in the ready queue. While simple to implement, FCFS may lead to the “convoy effect,” where a long-running thread prevents shorter tasks from executing, causing inefficiencies.

Multilevel Queue Scheduling:

Threads are divided into multiple queues based on priority, and each queue may have its own scheduling algorithm. This approach provides differentiation between threads of varying importance, such as interactive and background tasks.

Completely Fair Scheduler (CFS):

Found in the Linux kernel, CFS allocates CPU time based on the concept of fairness among threads. It attempts to distribute CPU time proportionally among threads, ensuring that each thread gets its fair share over time.

Deadline-Based Scheduling:

Primarily used in real-time systems, this algorithm assigns deadlines to threads and schedules them to meet their respective deadlines. Threads with tighter deadlines are given priority.

Multicore Scheduling:

With the advent of multi-core processors, thread scheduling extends to distributing threads across multiple cores. Algorithms focus on load balancing, minimizing contention, and optimizing cache utilization.

User-Level Threading Schedulers:

User-level threading (ULT) schedulers operate at the application level rather than within the operating system kernel. In this approach, the application itself manages its own threads and scheduling, bypassing the kernel’s thread management. User-level threads provide a level of control and flexibility for application developers, but they also come with trade-offs in terms of efficiency and system interaction.

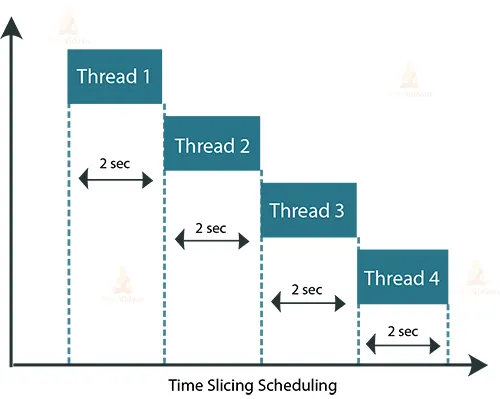

Time-Sliced Scheduling:

Time-sliced scheduling, also known as time-sharing or round-robin scheduling, involves dividing CPU time into fixed intervals called time slices or quanta. Each thread is allocated a time slice during which it can execute on the CPU. Once a thread’s time slice expires, it is preemptively moved out of the CPU, and another thread is given a chance to execute. The preempted thread is placed at the end of the scheduling queue and will receive another time slice when its turn comes up again. Time-sliced scheduling ensures that all threads get a fair share of CPU time, preventing any single thread from monopolizing the CPU for extended periods. This approach is particularly effective for scenarios where responsiveness and fairness are essential, such as interactive multitasking environments.

Preemptive Scheduling:

Preemptive scheduling takes the concept of time slicing further by allowing the scheduler to forcibly interrupt a running thread and switch to another thread. This interruption is known as preemption. In preemptive scheduling, threads can be preempted at any time, even before their time slice expires, based on certain events or priorities. Preemptive scheduling introduces finer control over thread execution and enables the operating system to respond quickly to high-priority tasks or events. It is especially useful in scenarios where real-time responsiveness, priority-based execution, and resource allocation are critical.

Working of Thread Scheduler:

Thread States:

Threads typically go through different states during their lifecycle, including “running,” “ready,” “blocked,” and “terminated.” The scheduler manages transitions between these states based on thread behaviour and external events.

Thread Queue Management:

Threads that are ready to execute but are waiting for CPU time are placed in a queue known as the “ready queue.” The scheduler maintains this queue, which holds threads with varying priorities or characteristics.

Scheduling Criteria:

The scheduler uses various criteria to make decisions about which thread to run next. These criteria can include thread priorities, execution history, expected CPU burst times, and any special requirements (e.g., real-time constraints).

Scheduling Algorithms:

Different scheduling algorithms dictate how threads are selected from the ready queue for execution. Common algorithms include:

1. Round-Robin Scheduling: Threads are given a fixed time slice (quantum) to execute, and they rotate in and out of the CPU in a circular fashion.

2. Priority-Based Scheduling: Threads are assigned priorities, and the scheduler runs the highest-priority thread that is ready to execute.

3. Shortest Job Next (SJN) Scheduling: The thread with the shortest estimated execution time is selected next.

4. Multilevel Queue Scheduling: Threads are divided into priority-based queues, and the scheduler chooses threads from different queues based on their priorities.

5. Preemption: Many modern schedulers use preemption, which allows the scheduler to forcibly interrupt a running thread to give another thread a chance to execute. Preemption is essential for enforcing priority-based scheduling and ensuring that high-priority threads get CPU time when needed.

Context Switching:

When the scheduler decides to switch from one thread to another, it performs a context switch. During a context switch, the current thread’s state is saved, and the state of the next thread to be executed is loaded. This involves updating registers, memory mappings, and other relevant information.

Interrupt Handling:

The scheduler interacts with hardware interrupts and timers to handle events such as I/O completion, timeouts, or hardware interrupts. These events can trigger context switches and affect thread execution.

Resource Management:

The scheduler manages various system resources that threads might contend for, such as memory, I/O devices, and synchronization primitives like locks and semaphores.

Dynamic Adjustment:

Some schedulers dynamically adjust priorities or time slices based on factors like thread behavior, past execution history, or system load. This adaptive approach helps optimize performance under varying conditions.

Real-Time Scheduling:

In real-time systems, where meeting deadlines is critical, the scheduler ensures that threads with strict timing constraints are given priority to execute within their specified time frames.

Example:

public class Main {

public static void main(String[] args) {

//creating thread1

MyRunnable1 r1=new MyRunnable1();

//creating thread2

MyRunnable2 r2=new MyRunnable2();

Thread t1=new Thread(r1);

//setting the name for the thread1

t1.setName("TechVidvan-Thread1");

t1.start();

Thread t2=new Thread(r2);

//setting the name for the thread2

t2.setName("TechVidvan-Thread2");

t2.start();

}

}

//defining the thread1

class MyRunnable1 implements Runnable {

int i=1;

public void run() {

while(i<4){

System.out.println(Thread.currentThread().getName()+" "+i);

i++;

}

}

}

//defining the thread2

class MyRunnable2 implements Runnable {

int i=1;

public void run() {

while(i<4){

System.out.println(Thread.currentThread().getName()+" "+i);

i++;

}

}

}

Output:

TechVidvan-Thread1 1

TechVidvan-Thread1 2

TechVidvan-Thread1 3

TechVidvan-Thread2 1

TechVidvan-Thread2 2

TechVidvan-Thread2 3

Two instances of Runnable implementations were created: MyRunnable1 and MyRunnable2. Two thread objects, t1, and t2, are created, each taking the corresponding Runnable as a parameter. The threads are named “TechVidvan-Thread1” and “TechVidvan-Thread2”, respectively. Both Threads started using the start() method. The MyRunnable1 class implements the Runnable interface. It contains a loop that runs as long as the variable i is less than 4. In each iteration, it prints the name of the current thread (Thread.currentThread().getName()) along with the current value of i. The MyRunnable2 class also implements the Runnable interface and has a similar loop that prints the name of the current thread along with the value of i.

Conclusion

In conclusion, the thread scheduler is an essential part of modern operating systems that enables efficient multithreading, concurrency, and resource utilization. Employing various scheduling policies, ensures fairness, responsiveness, and optimal execution of threads, contributing to the overall performance and stability of the system.