Connectors and Drivers in the World of Apache Sqoop

Sqoop is a tool which is designed for the data transfers between the RDBMS and the Hadoop ecosystem. One of the major advantages of Apache Sqoop is that it is easy to use, and we can use it with the varieties of systems (inside and outside) of the Hadoop ecosystem.

With Apache Sqoop, we can easily import or export data from all relational databases that support JDBC interface. This can be done by using the same command line arguments exposed by Apache Sqoop.

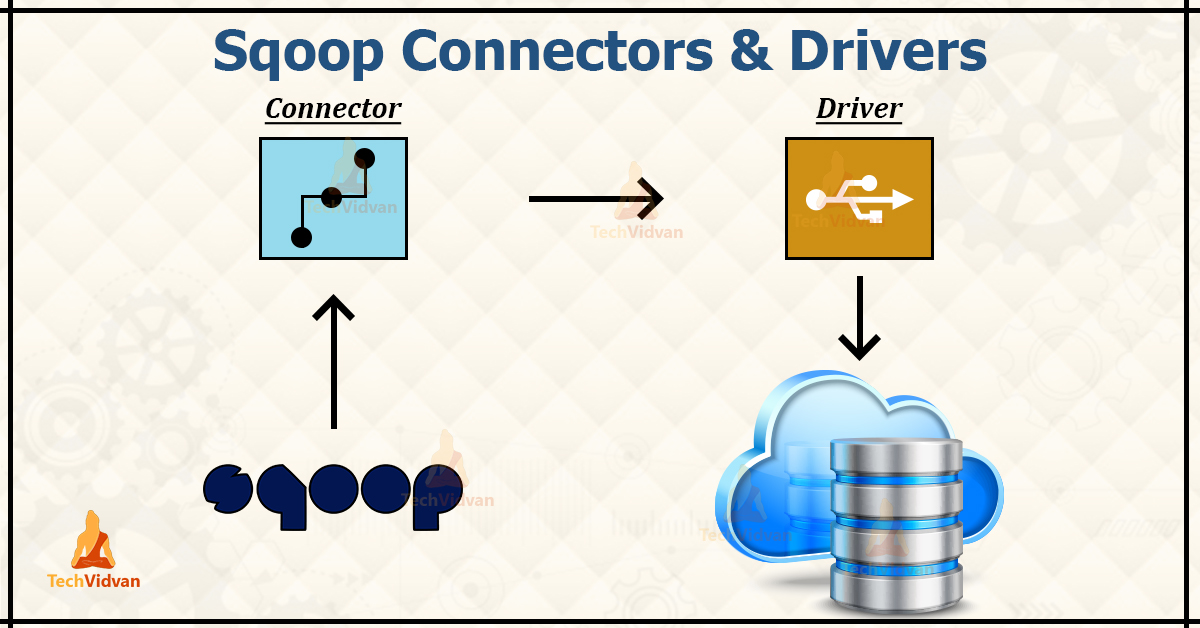

The users of various Apache projects within the Hadoop ecosystem use the words “driver” and “connector” interchangeably. But in the context of Apache Sqoop, these words mean completely different things. The connector and the driver both are needed for every Sqoop invocation.

In this article, you will explore each and every concept related to Sqoop driver and connector.

What is a Sqoop Driver?

In Apache Sqoop, the word driver refers to the JDBC Driver. Basically, JDBC is the standard Java API for accessing RDBMS and some data warehouses. The Java language prescribes only about classes and the methods contained in this interface and JDK does not have any default implementat

ion.

The database vendors have to write their own implementation, which will communicate with the corresponding database with its native protocol.

Since the database vendors create the drivers due to which they are usually offered with the restrictive licenses. This prohibits their shipping with the Apache Sqoop distribution. Thus, the user has to separately download the driver and install them into the Sqoop prior to its use.

What is Sqoop Connector?

For communicating with the RDBMS, there is a programming language known as Structured Query Language (SQL).

There is a standard that prescribes how the language SQL should look like, but every database has some of its own dialect of SQL. The basics were usually the same across all the databases, but there are some changes in some conditions.

Sqoop connectors allow Apache Sqoop to overcome the differences in the SQL dialects supported by the different relational databases along with providing the optimized data transfer.

A connector in Apache Sqoop is a pluggable piece which is used for fetching metadata about the transferred data (such as columns, data types, …) and to drive data transfer itself in a most efficient manner.

The connector will work on various databases out of the box. Apache Sqoop also ships with the specialized connectors for databases like MySQL, Microsoft SQL Server, PostgreSQL, Oracle, DB2 and Netezza.

Thus, generally we don’t have to download the extra connectors for starting data transfer. However, some special connectors are available on the internet that add support for the additional database systems or improve the performance of built-in connectors.

Let us explore special connectors for various databases.

Sqoop shipped Specialized Connectors for various Databases

1. MySQL JDBC Connector

It is the most basic connector shipped with Apache Sqoop. This connector uses only the JDBC interface for accessing metadata and transferring data. The MySQL JDBC Connector supports the upsert functionality by using the argument –update-mode allow insert.

2. MySQL Direct Connector

This connector allows the faster import and export to or from the MySQL by using the mysqldump and the mysqlimport tools functionality instead of using the SQL selects and inserts. For using the MySQL Direct Connector, we have to specify the –direct argument for our import/export job.

For example:

$ sqoop import --connect jdbc:mysql://localhost/demodb --table EMPLOYEES \

--direct

Limitations

- Currently, it does not support the import of the large object columns such as BLOB and CLOB.

- It does not support importing to HBase and Accumulo.

- This does not support the use of a staging table while exporting data.

- It does not support the import of views.

3. Microsoft SQL Connector

The Microsoft SQL Connector is the plugin provided by Microsoft for connecting with the SQL instance.

4. PostgreSQL Connector

The connection string for PostgreSQL Connector is:

jdbc:postgresql://<HOST>:<PORT>/<DATABASE_NAME>

For eg:

jdbc:postgresql://my_postgres_server_hostname:5432/my_database_name

The extra arguments supported by the PostgreSQL Connector is:

| Argument | Description |

| –schema <name> | This argument specifies the Scheme name that Apache Sqoop should use. The default is “public”. |

5. PostgreSQL Direct Connector

The PostgreSQL Direct Connector allows for the faster import and export to and from the PostgreSQL “COPY” command.

For using PostgreSQL Direct Connector, we have to specify the –direct argument for our import/export job.

The additional PostgreSQL argument supported by direct connector are:

Argument Description --boolean-true-string <str> Specify the string to be used for encoding the true value of the boolean columns. The default is "TRUE". --boolean-false-string <str> Specify the string to be used for encoding the false value of the boolean columns. The default value is "FALSE".

Limitations

- The direct connector currently doesn’t provide support for the import of large object columns such as BLOB and CLOB.

- It does not support importing to HBase and Accumulo.

- It does not support the import of views.

6. pg_bulkload connector

The pg_bulkload connector is the direct connector for exporting data into PostgreSQL. The pg_bulkload connector uses pg_bulkload.

The benefits of this connector is:

- Fast exports by passing the shared buffers and WAL

- Flexible error and records handling

- ETL feature with filter functions.

For export job execution the pg_bulkload connector requires the following conditions:

- We must install the pg_bulkload on the DB server and on all the slave nodes.

- The PostgreSQL JDBC must be present on the client node.

- There is a need for the superuser role of the PostgreSQL database for the execution of pg_bulkload.

7. Netezza Connector

The Netezza connector provides support for an optimized data transfer facility by using Netezza external tables feature.

Every map task of the Netezza connector’s import job works on the subset of the Netezza partitions and creates and uses the external table for data transport. For export jobs, it will use an external table for pushing data fast into the Netezza system.

Example of Sqoop import by using the Netezza external table feature.

$ sqoop import \

--direct \

--connect jdbc:netezza://nzhost:5480/sqoop \

--table nztable \

--username nzuser \

--password nzpass \

--target-dir hdfsdir

Example of Sqoop export with tab as the field terminator character.

$ sqoop export \

--direct \

--connect jdbc:netezza://nzhost:5480/sqoop \

--table nztable \

--username nzuser \

--password nzpass \

--export-dir hdfsdir \

--input-fields-terminated-by "\t"

8. Data Connector for Oracle and Hadoop

The Data Connector for Hadoop and Oracle is now included in Apache Sqoop. We can enable it by specifying the argument –direct for our import/export job.

The Data Connector for Oracle and Hadoop accepts the responsibility for following Sqoop Job types:

- Import jobs which are Non-Incremental.

- Export jobs

The Data Connector for Oracle and Hadoop doesn’t accept the responsibility for the other Sqoop job types like eval jobs etc.

This Connector accepts the responsibility for the Sqoop Jobs having the following attributes:

- Oracle-related

- Table-Based – Sqoop Jobs where a table argument is used and the specified object is a table.

- There must be at least 2 mappers. Sqoop jobs where Sqoop command-line doesn’t include: –num-mappers 1

The Sqoop parameter –connect parameter defines the Oracle instance or the Oracle RAC to be connected to. This parameter is required with all the Sqoop import and export commands.

The Data Connector for Oracle and Hadoop requires the associated connection string to be in a specific format which is dependent on whether the Oracle SID, Service or TNS name is defined or not.

We can use the TNS name based URL scheme for enabling the authentication using the Oracle wallets.

--connect jdbc:oracle:thin:@OracleServer:OraclePort:OracleSID --connect jdbc:oracle:thin:@//OracleServer:OraclePort/OracleService --connect jdbc:oracle:thin:@TNSName

Driver Sqoop arguments in the JDBC connection for various Databases

The driver argument in JDBC connection specifies the JDBC driver class that Sqoop must use for connecting to the database.

Syntax:

--driver <JDBC driver class>

The syntax which we can use depending on the type of the database we want to connect to:

Microsoft SQL Server: –driver com.microsoft.sqlserver.jdbc.SQLServerDriver

Greenplum: –driver org.postgresql.Driver

Netezza: –driver org.netezza.Driver

Oracle: –driver oracle.jdbc.driver.OracleDriver

IBM DB2: –driver com.ibm.db2.jcc.DB2Driver

IBM DB2 z/OS: –driver com.ibm.db2.jcc.DB2Driver

Teradata: –driver com.teradata.jdbc.TeraDriver

Aurora: –driver com.mysql.jdbc.Driver

Connect Sqoop arguments in the JDBC connection for various Databases

The connect argument in Apache Sqoop specifies the JDBC connection string that Apache Sqoop must use in order to connect to the database. The connection string must be based on the driver that we define in the driver argument.

Syntax:

--connect <JDBC connection string>

The Syntax which we can use depending on the type of the database we want to connect to:

Microsoft SQL Server: –connect jdbc:sqlserver://<host_name>:<port or named_instance>;databaseName=<database_name>

Greenplum: –connect jdbc:postgresql://<host_name>:<port>/<database_name>

Netezza: –connect “jdbc:netezza://<database_server_name>:<port>/<database_name>;schema=<schema_name>”

Oracle: –connect jdbc:oracle:thin:@<database_host_name>:<database_port>:<database_SID>

IBM DB2: –connect jdbc:db2://<host_name>:<port>/<database_name>

IBM DB2 z/OS: –connect jdbc:db2://<host_name>:<port>/<database_name>

Teradata: –connect jdbc:teradata://<host_name>/database=<database_name>

Aurora: –connect “jdbc:mysql://<host_name>:<port>/<schema_name>”

Summary

The article had explained what are Sqoop drivers and connectors. In this article, you had also explored the different connectors shipped with Sqoop for connecting to various databases.

You had learned about the connectors like MySQL JDBC connector, MySQL Direct connector, Microsoft SQL connector, PostgreSQL connector, Netezza connector, and some more.

The article had also enlisted the drivers and connectors argument which we can use depending on the database we want to connect to.