Hadoop Counters & Types of Counters in MapReduce

In our previous Hadoop blog, we gave a detailed description of Hadoop InputFormat and OutputFormat. Now we are going to cover Hadoop Counters in detail. In this Hadoop tutorial, we will discuss what MapReduce counters are and what role they play.

Finally, we will cover the types of counters in Hadoop MapReduce: MapReduce Task Counters, File System Counters, FileInputFormat Counters, FileOutputFormat Counters, Job Counters, and Dynamic Counters.

Hadoop MapReduce

Before we start with Hadoop Counters, let us first recall what Hadoop MapReduce is.

MapReduce is the data processing layer of Hadoop. It processes large volumes of structured and unstructured data stored in HDFS, and it does so in parallel by dividing the submitted job into a set of independent tasks. Processing is broken into two phases: Map and Reduce.

- Map Phase: the first phase of processing. Each map task turns its input records into intermediate key-value pairs, so this is where the complex logic, business rules, and costly per-record code usually go.

- Reduce Phase: the second phase of processing. Reduce tasks combine the intermediate values that share a key, typically with lightweight processing such as aggregation or summation.
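To make the two phases concrete, here is a minimal single-process sketch of the classic word-count example in plain Java. It deliberately uses no Hadoop classes: `WordCountSketch` and its methods are hypothetical names, and a real cluster would run many map tasks in parallel and shuffle their output to reducers over the network.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Single-process sketch of the Map and Reduce phases (word count).
// Not Hadoop code: no HDFS, no shuffle, no parallelism; it only mirrors the data flow.
class WordCountSketch {

    // Map phase: turn one input line into intermediate (word, 1) pairs.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.toLowerCase().split("\\s+")) {
            if (!word.isEmpty()) { // split can yield "" for leading whitespace
                out.add(Map.entry(word, 1));
            }
        }
        return out;
    }

    // Reduce phase: aggregate all values that share the same key.
    static Map<String, Integer> reduce(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> totals = new TreeMap<>();
        for (Map.Entry<String, Integer> p : pairs) {
            totals.merge(p.getKey(), p.getValue(), Integer::sum);
        }
        return totals;
    }

    // Run map over every line (each line stands in for one task's input), then reduce.
    static Map<String, Integer> run(List<String> lines) {
        List<Map.Entry<String, Integer>> intermediate = new ArrayList<>();
        for (String line : lines) {
            intermediate.addAll(map(line));
        }
        return reduce(intermediate);
    }
}
```

For example, `WordCountSketch.run(List.of("the cat", "The dog"))` yields counts of 2 for `the` and 1 each for `cat` and `dog`.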

What are Hadoop Counters?

Counters in Hadoop are a useful channel for gathering statistics about a MapReduce job, whether for quality control or for application-level statistics. They are also useful for problem diagnosis.

A Counter represents a global Apache Hadoop counter, defined either by the MapReduce framework or by applications. Each counter in MapReduce is named by an enum and holds a long value.
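The idea of enum-named counters holding long values can be sketched with a plain `EnumMap`. This is an illustrative model only; `RecordCounter` and `EnumCounters` are hypothetical names, not Hadoop classes.

```java
import java.util.EnumMap;
import java.util.Map;

// Hypothetical counter names, chosen only for illustration.
enum RecordCounter {
    RECORDS_READ,
    RECORDS_SKIPPED
}

// Illustrative model (not org.apache.hadoop.mapreduce.Counter): each enum
// constant names one counter, and each counter holds a long value.
class EnumCounters<E extends Enum<E>> {
    private final Map<E, Long> values;

    EnumCounters(Class<E> type) {
        this.values = new EnumMap<>(type);
    }

    // Add to the named counter, creating it at 0 on first use.
    void increment(E name, long amount) {
        values.merge(name, amount, Long::sum);
    }

    // A counter that was never incremented reads as 0.
    long get(E name) {
        return values.getOrDefault(name, 0L);
    }
}
```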

Hadoop counters help us validate whether:

- the job read and wrote the correct number of bytes;

- the correct number of tasks was launched and completed successfully;

- the amount of CPU and memory consumed is appropriate for the job and the cluster nodes.
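As a sketch of the first two checks, the code below inspects a snapshot of counter values after a job finishes. It assumes the snapshot is a plain `Map` keyed by counter name and that you know the expected record count from your own input. In a real driver the values would come from the finished job, for example `job.getCounters().findCounter(TaskCounter.MAP_INPUT_RECORDS).getValue()`. `CounterChecks` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Sketch of post-job sanity checks on counter values.
// The snapshot is a plain Map here; a real driver would read job.getCounters().
class CounterChecks {

    // Returns a list of human-readable problems; an empty list means the checks passed.
    static List<String> validate(Map<String, Long> counters, long expectedInputRecords) {
        List<String> problems = new ArrayList<>();

        // Did the map tasks consume exactly the input we expected?
        long read = counters.getOrDefault("MAP_INPUT_RECORDS", 0L);
        if (read != expectedInputRecords) {
            problems.add("expected " + expectedInputRecords + " input records, map tasks read " + read);
        }

        // Did any map tasks fail along the way?
        long failedMaps = counters.getOrDefault("NUM_FAILED_MAPS", 0L);
        if (failedMaps > 0) {
            problems.add("NUM_FAILED_MAPS reported " + failedMaps);
        }
        return problems;
    }
}
```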

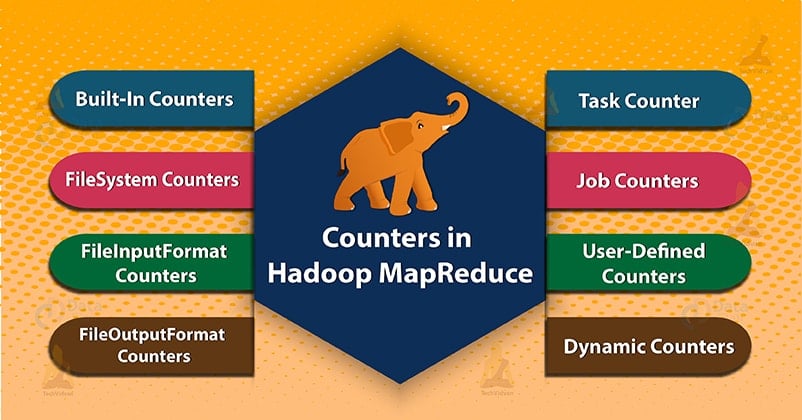

Types of Counters in MapReduce

There are two types of MapReduce counters:

- Built-in Counters

- User-Defined Counters (Custom Counters)

1. Built-in Counters in Hadoop MapReduce

Apache Hadoop maintains a set of built-in counters for every job, reporting various metrics. For example, counters for the number of bytes and records let us confirm that the expected amount of input was consumed and the expected amount of output was produced.

Hadoop counters are organized into groups, and there are several groups of built-in counters. Each group contains either task counters or job counters.

The groups of built-in counters in Hadoop are as follows:

a) MapReduce Task Counter

Task counters collect information about each task while it executes, such as the number of records read and written.

For example, MAP_INPUT_RECORDS is a task counter: it counts the input records read by each map task, and these per-task values are aggregated into a job-wide total.
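A minimal sketch of how a task counter such as MAP_INPUT_RECORDS behaves: each map task counts the records it reads from its own split, and the job-level figure is the sum over tasks. The class and method names are hypothetical; in a real job the framework, not user code, does this counting.

```java
import java.util.List;

// Sketch: per-task counting of input records, then aggregation to a job total.
class TaskCounterAggregation {

    // One map task's view: incremented once per record handed to map().
    static long countRecords(List<String> splitRecords) {
        long mapInputRecords = 0;
        for (String ignored : splitRecords) {
            mapInputRecords++;
        }
        return mapInputRecords;
    }

    // Job-wide value: the sum of every map task's counter.
    static long jobTotal(List<List<String>> splits) {
        long total = 0;
        for (List<String> split : splits) {
            total += countRecords(split);
        }
        return total;
    }
}
```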

b) File System Counters

These counters gather information such as the number of bytes read and written by each file system. Their names and descriptions are as follows:

- FileSystem bytes read: the number of bytes read by the file system.

- FileSystem bytes written: the number of bytes written to the file system.
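File system counters are kept separately for each file system scheme, which is why job output lists lines such as `HDFS: Number of bytes read` next to `FILE: Number of bytes read`. The sketch below models that bookkeeping with hypothetical names (`FsByteCounters`, `recordRead`, `recordWrite`); it is not Hadoop's implementation, and treating a path with no scheme as a local file is a simplifying assumption.

```java
import java.net.URI;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;

// Sketch: bytes read/written, tracked per file system scheme (HDFS, FILE, ...).
class FsByteCounters {
    private final Map<String, Long> bytesRead = new TreeMap<>();
    private final Map<String, Long> bytesWritten = new TreeMap<>();

    // "hdfs://nn:8020/x" -> "HDFS"; a path without a scheme is treated as local ("FILE").
    private static String scheme(String path) {
        String s = URI.create(path).getScheme();
        return s == null ? "FILE" : s.toUpperCase(Locale.ROOT);
    }

    void recordRead(String path, long bytes) {
        bytesRead.merge(scheme(path), bytes, Long::sum);
    }

    void recordWrite(String path, long bytes) {
        bytesWritten.merge(scheme(path), bytes, Long::sum);
    }

    long read(String scheme) { return bytesRead.getOrDefault(scheme, 0L); }

    long written(String scheme) { return bytesWritten.getOrDefault(scheme, 0L); }
}
```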

c) FileInputFormat Counters

These counters gather the number of bytes read by map tasks via FileInputFormat.

d) FileOutputFormat counters

These counters gather the number of bytes written via FileOutputFormat, either by map tasks (for map-only jobs) or by reduce tasks.
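The subtle point above is which tasks feed the FileOutputFormat counter. The sketch below encodes that rule under simplifying assumptions: per-task byte counts are passed in as lists, and a job with zero reduce tasks is treated as map-only. `FormatCounterSketch` and its methods are hypothetical names.

```java
import java.util.List;

// Sketch of which tasks contribute to the input/output format byte counters.
class FormatCounterSketch {

    // FileInputFormat counter: bytes read by map tasks through the input format.
    static long inputBytesRead(List<Long> mapBytesRead) {
        return mapBytesRead.stream().mapToLong(Long::longValue).sum();
    }

    // FileOutputFormat counter: in a map-only job (zero reducers) the map tasks
    // write the final output; otherwise the reduce tasks do.
    static long outputBytesWritten(int numReduceTasks, List<Long> mapBytesOut, List<Long> reduceBytesOut) {
        List<Long> writers = numReduceTasks == 0 ? mapBytesOut : reduceBytesOut;
        return writers.stream().mapToLong(Long::longValue).sum();
    }
}
```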

e) Job Counters in MapReduce

Job counters measure job-level statistics rather than values that change while a task is running.

For example, TOTAL_LAUNCHED_MAPS counts the number of map tasks that were launched over the course of a job. Job counters are maintained by the application master, so they don’t need to be sent across the network, unlike all other counters, including user-defined ones.
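The sketch below models why job counters need no network traffic: the coordinator that launches tasks (the application master) increments them itself. The class and method names are hypothetical. It assumes every launched map attempt is counted (Hadoop's description of TOTAL_LAUNCHED_MAPS notes that speculatively started tasks are included), so a task that fails and is re-run adds to the total twice.

```java
// Sketch: job counters kept by the application master, which sees every launch
// directly, so no counter values have to be shipped from the tasks.
class AppMasterJobCounters {
    private long totalLaunchedMaps;
    private long numFailedMaps;

    // Called once per launched map attempt (assumption: re-runs and speculative copies count).
    void onMapLaunched() {
        totalLaunchedMaps++;
    }

    // Called when a map attempt reports failure.
    void onMapFailed() {
        numFailedMaps++;
    }

    long totalLaunchedMaps() { return totalLaunchedMaps; }

    long numFailedMaps() { return numFailedMaps; }
}
```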

2. User-Defined Counters or Custom Counters in Hadoop MapReduce

In addition to built-in counters, Hadoop MapReduce lets user code define a set of counters and increment them as desired in the mapper or reducer. In Java, such counters are defined with an ‘enum’.

A job may define an arbitrary number of enums, each with an arbitrary number of fields. The name of the enum is the group name, and the enum’s fields are the counter names.
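Here is a sketch of how enum-based user-defined counters map onto groups and names, using a hypothetical `RecordQuality` enum in a record-parsing mapper that expects three comma-separated fields. A small in-memory registry stands in for the framework; in a real Hadoop task the increment would be `context.getCounter(RecordQuality.MALFORMED).increment(1)`. The enum's name becomes the group name (here the fully qualified Java class name) and each field becomes a counter name.

```java
import java.util.Map;
import java.util.TreeMap;

// Sketch of enum-based user-defined counters; the registry below is a
// hypothetical stand-in for the framework's counter storage.
class UserCounterDemo {

    // Hypothetical user-defined counters for a record-parsing mapper.
    enum RecordQuality { WELL_FORMED, MALFORMED }

    // group name -> counter name -> value
    private final Map<String, Map<String, Long>> counters = new TreeMap<>();

    void increment(Enum<?> counter, long amount) {
        String group = counter.getDeclaringClass().getName(); // enum type name = group name
        counters.computeIfAbsent(group, g -> new TreeMap<>())
                .merge(counter.name(), amount, Long::sum);      // enum field = counter name
    }

    long get(Enum<?> counter) {
        return counters.getOrDefault(counter.getDeclaringClass().getName(), Map.of())
                .getOrDefault(counter.name(), 0L);
    }

    // The "map" logic: classify each line and count it (assumes 3 fields per record).
    void mapLine(String line) {
        if (line.split(",").length == 3) {
            increment(RecordQuality.WELL_FORMED, 1);
        } else {
            increment(RecordQuality.MALFORMED, 1);
        }
    }

    Map<String, Map<String, Long>> snapshot() {
        return counters;
    }
}
```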

a) Dynamic Counters in Hadoop

A Java enum’s fields are fixed at compile time, so we cannot create new counters at run time using enums. Dynamic counters solve this: they are not defined at compile time but are created at run time by name, using strings for the group and counter names.
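A sketch of dynamic counters, modeled in memory: the group and counter names are strings built at run time, so a new counter appears the first time a new value is seen. The input format (`id,countryCode` lines), the group name `RecordsByCountry`, and the class name are hypothetical; inside a Hadoop task the equivalent call is `context.getCounter(group, name).increment(1)`.

```java
import java.util.Map;
import java.util.TreeMap;

// Sketch of dynamic counters: names are plain strings chosen at run time.
class DynamicCounterDemo {
    // group name -> counter name -> value
    private final Map<String, Map<String, Long>> counters = new TreeMap<>();

    void increment(String group, String name, long amount) {
        counters.computeIfAbsent(group, g -> new TreeMap<>()).merge(name, amount, Long::sum);
    }

    long get(String group, String name) {
        return counters.getOrDefault(group, Map.of()).getOrDefault(name, 0L);
    }

    int counterCount(String group) {
        return counters.getOrDefault(group, Map.of()).size();
    }

    // Hypothetical input "id,countryCode": one counter per country code seen.
    void mapLine(String line) {
        String[] fields = line.split(",");
        if (fields.length == 2) {
            increment("RecordsByCountry", fields[1].trim(), 1);
        }
    }
}
```

Because every distinct string creates a new counter, keep the set of names small: Hadoop caps the number of counters per job (configurable through `mapreduce.job.counters.max`), and exceeding the cap makes the job fail.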

Conclusion

Hence, counters let us check whether a job has read and written the correct number of bytes. They also measure the progress, or the number of operations, that occur within a MapReduce job.

Hadoop maintains built-in counters and also supports user-defined counters to measure what happens within a MapReduce job.

We hope this blog helped you. If you have any questions about Hadoop counters, leave a comment in the section below.