Salient Features Of MapReduce – Importance of MapReduce

Apache Hadoop is a software framework that stores and processes big data across clusters of commodity hardware. Hadoop is based on the MapReduce model for processing huge amounts of data in a distributed manner.

This MapReduce tutorial lists several features of MapReduce. After reading it, you will understand why MapReduce is a good fit for processing vast amounts of data.

First, we will give a brief introduction to the MapReduce framework. Then we will explore the various features of MapReduce.

Let us start with the introduction to the MapReduce framework.

Introduction to MapReduce

MapReduce is a software framework for writing applications that can process huge amounts of data across clusters of inexpensive nodes. Hadoop MapReduce is the processing part of Apache Hadoop.

It is also known as the heart of Hadoop, and it is a widely used data processing framework. Several companies, such as Amazon, Yahoo, and Zuventus, use the MapReduce framework for high-volume data processing.

Let us now study the various features of Hadoop MapReduce.

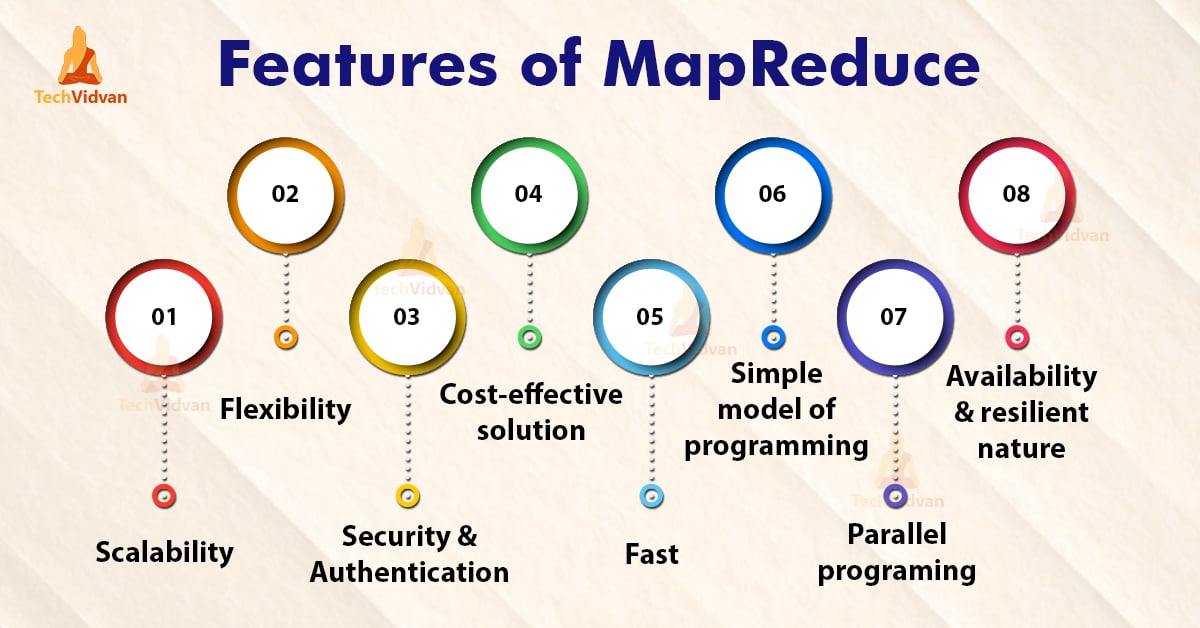

Features of MapReduce

1. Scalability

Apache Hadoop is a highly scalable framework. This is because of its ability to store and distribute huge data sets across many servers. These servers are inexpensive and can operate in parallel. We can easily scale the storage and computation power by adding servers to the cluster.

Hadoop MapReduce programming enables business organizations to run applications across large sets of nodes, which can involve thousands of terabytes of data.

2. Flexibility

MapReduce programming enables companies to access new sources of data. It enables companies to operate on different types of data. It allows enterprises to access structured as well as unstructured data, and derive significant value by gaining insights from the multiple sources of data.

Additionally, the MapReduce framework supports multiple programming languages and data from sources ranging from email and social media to clickstreams.

MapReduce processes data as simple key-value pairs, so it supports many data types, including metadata, images, and large files. Hence, MapReduce is more flexible in dealing with data than a traditional DBMS.
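To illustrate how key-value pairs let one job handle very different inputs, here is a minimal sketch in plain Python. It is not Hadoop code: the function names (`to_key_value`, `summarize`) and the record shapes (raw bytes for an image, a dict for a clickstream event, a string for an email body) are assumptions made up for this example.

```python
def to_key_value(record):
    """Turn one raw record into a (key, value) pair, whatever its shape."""
    if isinstance(record, bytes):
        # Binary input such as an image: emit its size in bytes.
        return "binary", len(record)
    if isinstance(record, dict):
        # Structured input such as a clickstream event: count one event per source.
        return record.get("source", "unknown"), 1
    # Anything else is treated as text, such as an email body: emit its word count.
    return "text", len(str(record).split())


def summarize(records):
    """Add up the emitted values per key, as a reducer would."""
    totals = {}
    for key, value in map(to_key_value, records):
        totals[key] = totals.get(key, 0) + value
    return totals
```

Once every record is reduced to a key and a value, the rest of the pipeline no longer needs to know what kind of data it started from.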

3. Security and Authentication

The MapReduce programming model relies on the security mechanisms of HBase and HDFS, which allow only authenticated users to operate on the data. This protects system data against unauthorized access and enhances system security.

4. Cost-effective solution

Hadoop’s scalable architecture with the MapReduce programming framework allows the storage and processing of large data sets in a very affordable manner.

5. Fast

Hadoop uses a distributed storage method called the Hadoop Distributed File System (HDFS), which implements a mapping system for locating data in a cluster.

The tools used for data processing, such as MapReduce programs, generally run on the same servers that store the data, which allows faster processing.

So, even when we are dealing with large volumes of unstructured data, Hadoop MapReduce on a sufficiently large cluster can process terabytes of data in minutes and petabytes of data in hours.
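The idea of running the computation where the data already lives can be sketched as follows. This is a simplified illustration in plain Python, not the actual Hadoop scheduler: the function `assign_tasks`, its parameters, and the node names are hypothetical, and it assumes at least one node is free and ignores how many task slots each node has.

```python
def assign_tasks(block_locations, free_nodes):
    """Pick a node for each block, preferring a node that already stores it.

    block_locations: dict mapping block id -> list of nodes holding a copy
    free_nodes: set of nodes able to run a task (assumed non-empty)
    Returns a dict mapping block id -> (node, is_local).
    """
    assignments = {}
    for block_id, holders in block_locations.items():
        local = [node for node in holders if node in free_nodes]
        if local:
            # Data-local task: no network transfer is needed.
            assignments[block_id] = (local[0], True)
        else:
            # Fallback: run elsewhere and read the block over the network.
            assignments[block_id] = (sorted(free_nodes)[0], False)
    return assignments
```

A task marked as local reads its input from the local disk, which is why keeping processing and storage on the same servers tends to speed things up.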

6. Simple model of programming

Among the various features of Hadoop MapReduce, one of the most important is its simple programming model. This allows programmers to develop MapReduce programs that handle tasks easily and efficiently.

MapReduce programs can be written in Java, which is widely used and not very hard to pick up. So, anyone can learn to write MapReduce programs that meet their data processing needs.
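To show how small the model is, here is a word-count sketch. It is written in plain Python for brevity rather than against Hadoop's Java API, and it runs on one machine; the function names are assumptions chosen for this example. The programmer supplies only the map and reduce functions, while the grouping step in between stands in for what the framework does.

```python
from collections import defaultdict


def map_words(line):
    """Map phase: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle phase: group all values by key (done by the framework in Hadoop)."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_counts(key, values):
    """Reduce phase: collapse the values for one key into a single total."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over the input lines."""
    pairs = [pair for line in lines for pair in map_words(line)]
    return dict(reduce_counts(key, values) for key, values in shuffle(pairs).items())
```

For example, `word_count(["big data", "Big cluster"])` counts "big" twice and the other two words once each.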

7. Parallel Programming

One of the major aspects of MapReduce programming is its parallel processing. It divides a job into tasks in a manner that allows their execution in parallel.

Parallel processing allows multiple processors to execute these divided tasks, so the entire program runs in less time.
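The divide-and-combine pattern can be sketched on a single machine with Python's standard `concurrent.futures` module. This is only an illustration: the helper names are hypothetical, a thread pool stands in for the separate machines of a real cluster, and the work per chunk (a simple sum) is deliberately trivial.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, n_chunks):
    """Divide the input into roughly equal chunks, one per task."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def partial_sum(chunk):
    """The work done by one task on its own chunk."""
    return sum(chunk)


def parallel_sum(data, n_workers=4):
    """Process the chunks concurrently, then combine the partial results."""
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_sum, chunks))
    return sum(partials)
```

Because each chunk is processed independently, adding workers changes only how the chunks are scheduled, not the final result.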

8. Availability and resilient nature

Whenever data is sent to an individual node, the same data is also forwarded to other nodes in the cluster. So, if any particular node fails, copies on other nodes can still be accessed whenever needed. This ensures high availability of data.
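The effect of keeping several copies can be sketched with a toy model. The round-robin placement below is an assumption made for simplicity and is not how HDFS actually chooses nodes; the function names are hypothetical, and the sketch assumes the replication factor does not exceed the number of nodes. The default of three copies matches the usual HDFS default replication factor.

```python
def place_replicas(block_ids, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin."""
    placement = {}
    for i, block in enumerate(block_ids):
        placement[block] = [nodes[(i + r) % len(nodes)] for r in range(replication)]
    return placement


def readable_blocks(placement, failed_nodes):
    """Return the blocks that still have at least one copy on a healthy node."""
    return {
        block
        for block, holders in placement.items()
        if any(node not in failed_nodes for node in holders)
    }
```

With three copies per block, a block becomes unreadable only if all three nodes holding it fail at the same time.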

One of the major features offered by Apache Hadoop is its fault tolerance. The Hadoop MapReduce framework can quickly recognize faults when they occur.

It then applies a quick and automatic recovery solution. This feature makes it a game-changer in the world of big data processing.
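One common form of automatic recovery is to rerun a failed task on another node. The sketch below illustrates that idea in plain Python under stated assumptions: `run_with_retries`, its `max_attempts` parameter, and the use of `RuntimeError` to signal a node failure are all made up for this example and do not mirror Hadoop's internal API.

```python
def run_with_retries(task, nodes, max_attempts=4):
    """Try the task on successive nodes until one attempt succeeds.

    task: callable taking a node name; raises RuntimeError on failure
    Returns (result, attempt_number) for the first successful attempt.
    """
    errors = []
    for attempt, node in enumerate(nodes[:max_attempts], start=1):
        try:
            return task(node), attempt
        except RuntimeError as exc:
            # Record the failure and move on to the next node.
            errors.append(f"{node}: {exc}")
    raise RuntimeError("task failed on all attempts: " + "; ".join(errors))
```

The caller sees a single result even when some attempts failed along the way, which is what makes the recovery automatic from the programmer's point of view.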

Summary

I hope that after reading this article you clearly understand the various features of Hadoop MapReduce. The MapReduce framework is a scalable, flexible, cost-effective, and fast processing system.

It offers security, fault-tolerance, and authentication. MapReduce is a simple model of programming and offers parallel programming.