HDFS Data Block – Learn the Internals of Big Data Hadoop

In this Big Data Hadoop tutorial, we provide a detailed description of the Hadoop HDFS data block. First, we cover what a data block in Hadoop is, why blocks are important, and why the default HDFS block size is 128 MB.

We will also walk through an example of data blocks in Hadoop and discuss the advantages of HDFS.

Introduction to HDFS Data Block

Hadoop HDFS splits large files into smaller chunks known as blocks. A block is the physical representation of data on disk and the basic unit in which HDFS stores files: every file in HDFS is stored as one or more blocks. HDFS clients have no control over where blocks are placed; the NameNode makes all such decisions.

By default, the HDFS block size is 128 MB, and you can change it as per your requirements (for example, through the dfs.blocksize property in hdfs-site.xml). All blocks of a file are the same size except the last block, which can be either the same size or smaller.

The Hadoop framework breaks files into 128 MB blocks and then stores them in HDFS, which distributes the blocks across multiple DataNodes in the cluster.

Example

Suppose a file is 513 MB and we are using the default block size of 128 MB. The Hadoop framework will then create 5 blocks: the first four are 128 MB each, and the last block is only 1 MB.

This example makes it clear that a file stored in HDFS does not have to be an exact multiple of the configured block size (128 MB, 256 MB, etc.). The final block of a file uses only as much space as it needs: the 1 MB block above occupies about 1 MB on disk, not 128 MB.
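The splitting described in the example can be sketched in a few lines of Python. This is a minimal illustration, not HDFS code: the function name split_into_blocks is hypothetical, and the sketch assumes binary megabytes (1 MB = 1024 * 1024 bytes, so the 128 MB default is 134,217,728 bytes).

```python
MB = 1024 * 1024  # bytes per (binary) megabyte

def split_into_blocks(file_size, block_size=128 * MB):
    """Return (offset, length) pairs for each block of a file.

    Every block is block_size bytes except possibly the last one,
    which holds only the bytes that remain.
    """
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks

blocks = split_into_blocks(513 * MB)
print(len(blocks))                              # 5
print([length // MB for _, length in blocks])   # [128, 128, 128, 128, 1]
```

The last entry records only the 1 MB actually present; nothing is padded out to a full 128 MB.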

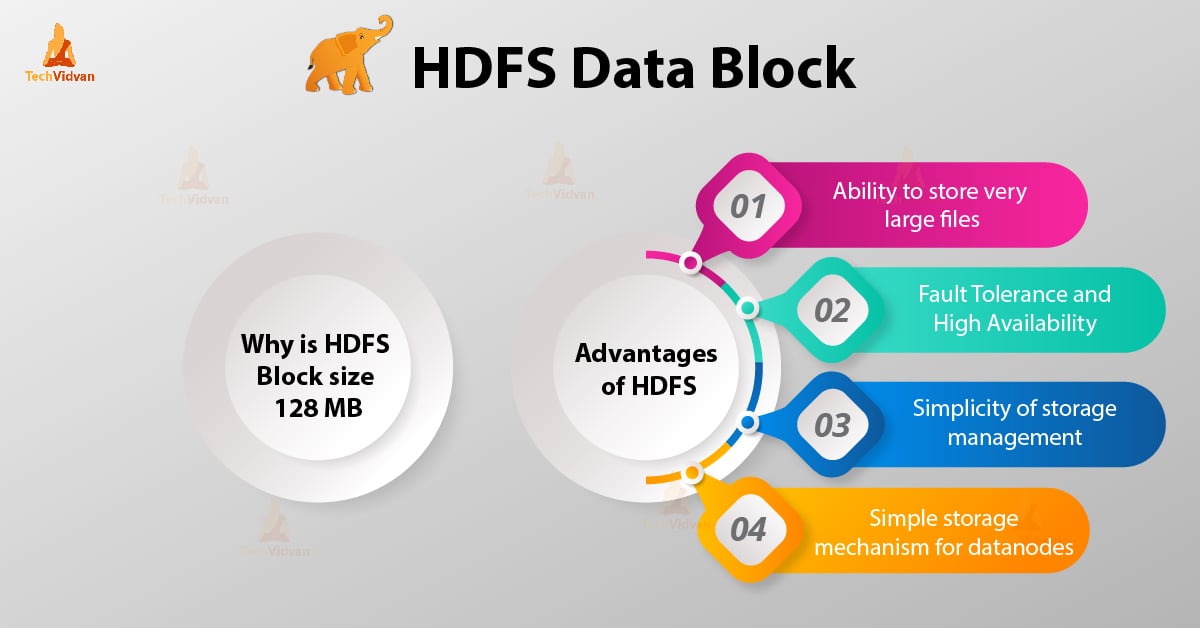

Why is HDFS Block size 128 MB?

HDFS stores terabytes and petabytes of data. If the HDFS block size were 4 KB, like a typical Linux file system block, HDFS would have to track an enormous number of blocks, and therefore an enormous amount of metadata.

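Maintaining and managing that many blocks and their metadata would put a huge load on the NameNode, which keeps this metadata in memory, and would create heavy metadata traffic between the DataNodes and the NameNode, which is exactly what we want to avoid.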
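To put rough numbers on this, the sketch below counts how many blocks 1 PB of data needs at each block size. It treats the data as one continuous 1 PB stream, so the partial final blocks of individual files are ignored, and block_count is a hypothetical helper. Since the NameNode keeps an entry for every block, its metadata grows in proportion to these counts.

```python
KB, MB, PB = 1024, 1024 ** 2, 1024 ** 5

def block_count(total_bytes, block_size):
    """Blocks needed to hold total_bytes: ceiling division, so a partial block counts as one."""
    return -(-total_bytes // block_size)

small = block_count(1 * PB, 4 * KB)    # Linux-style 4 KB blocks
large = block_count(1 * PB, 128 * MB)  # HDFS default 128 MB blocks
print(f"{small:,} blocks at 4 KB")     # 274,877,906,944 blocks at 4 KB
print(f"{large:,} blocks at 128 MB")   # 8,388,608 blocks at 128 MB
print(small // large)                  # 32768 times as many blocks to track
```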

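On the other hand, the block size can't be so large that the system waits a very long time for one last unit of data processing to finish. Because a MapReduce job typically runs one map task per block, very large blocks mean fewer tasks running in parallel, and the job sits waiting while a few long-running tasks finish.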
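The trade-off can be illustrated with a deliberately simple toy model. This is not how Hadoop schedules tasks: the function job_time is hypothetical, and it assumes each block is one task whose cost is its size in MB plus a fixed per-task overhead, with each task handed to whichever of a fixed number of workers frees up first. Tiny blocks lose to per-task overhead; huge blocks leave workers idle.

```python
import heapq

def job_time(file_mb, block_mb, workers, overhead_per_task):
    """Toy estimate of how long `workers` parallel slots take to process a file.

    Hypothetical model: each block is one task costing its size in MB plus a
    fixed overhead; each task goes to the worker that becomes free first.
    Returns the time at which the last task finishes.
    """
    full_blocks, last_mb = divmod(file_mb, block_mb)
    costs = [block_mb + overhead_per_task] * full_blocks
    if last_mb:
        costs.append(last_mb + overhead_per_task)
    finish_times = [0] * workers  # a list of equal values is already a valid min-heap
    for cost in costs:
        free_at = heapq.heappop(finish_times)  # earliest-available worker
        heapq.heappush(finish_times, free_at + cost)
    return max(finish_times)

# 10 GB file, 8 parallel workers, per-task overhead worth 10 MB of work
for block_mb in (4, 128, 2048):
    print(block_mb, job_time(10240, block_mb, 8, 10))
# 4 4480
# 128 1380
# 2048 2058
```

With these made-up parameters the 128 MB setting finishes first: 4 MB blocks pay the per-task overhead 2,560 times, while 2 GB blocks produce only 5 tasks, so 3 of the 8 workers never get any work.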

Advantages of HDFS

Now that we have learned what an HDFS data block is, let's discuss the advantages of Hadoop HDFS.

1. Ability to store very large files

Hadoop HDFS can store files that are larger than any single disk in the cluster, because the Hadoop framework breaks each file into blocks and distributes them across many nodes.
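A minimal sketch of the idea, assuming a hypothetical round-robin placement (in real HDFS the NameNode chooses block locations) and ignoring replication: a 10 TB file spread over five nodes needs only 2 TB on each, even though no single 4 TB disk could hold the whole file. The helper spread_blocks is illustrative only.

```python
TB = 1024 ** 4
BLOCK = 128 * 1024 ** 2  # 128 MB default block size

def spread_blocks(file_size, num_nodes, block_size=BLOCK):
    """Assign blocks round-robin to nodes and return the bytes stored on each node.

    Hypothetical placement for illustration only; no replication is modelled.
    """
    per_node = [0] * num_nodes
    offset, index = 0, 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        per_node[index % num_nodes] += length
        offset += length
        index += 1
    return per_node

usage = spread_blocks(10 * TB, num_nodes=5)  # a 10 TB file
disk = 4 * TB                                # each node has a 4 TB disk
print(max(usage) / TB)                       # 2.0
print(all(u <= disk for u in usage))         # True
```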

2. Fault tolerance and High Availability of HDFS

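The Hadoop framework can easily replicate blocks between DataNodes (by default, each block has three replicas). This gives HDFS fault tolerance and high availability: if a DataNode fails, its blocks can still be read from replicas on other nodes.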
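The fault-tolerance argument can be checked with a toy model. The placement below is a simplified round-robin that puts each block's replicas on distinct nodes; it is an assumption for illustration, since HDFS itself uses a rack-aware placement policy. The node names and both helper functions are hypothetical.

```python
from itertools import combinations

def place_replicas(num_blocks, nodes, replication=3):
    """Hypothetical placement: block i's replicas go to `replication` consecutive nodes."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    placement = {}
    for block_id in range(num_blocks):
        start = block_id % len(nodes)
        placement[block_id] = [nodes[(start + i) % len(nodes)] for i in range(replication)]
    return placement

def readable_after_failure(placement, failed):
    """True if every block still has at least one replica on a node not in `failed`."""
    return all(any(node not in failed for node in replicas)
               for replicas in placement.values())

nodes = ["dn1", "dn2", "dn3", "dn4", "dn5"]
placement = place_replicas(num_blocks=5, nodes=nodes)  # default replication of 3
print(placement[0])                                              # ['dn1', 'dn2', 'dn3']
print(readable_after_failure(placement, {"dn2"}))                 # True
print(all(readable_after_failure(placement, set(pair))
          for pair in combinations(nodes, 2)))                    # True: any two nodes may fail
print(readable_after_failure(placement, {"dn1", "dn2", "dn3"}))  # False: block 0 is lost
```

With three replicas on distinct nodes, any two DataNodes can fail without making a block unreadable; only losing all three holders of a block does.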

3. Simplicity of storage management

Because HDFS uses a fixed block size (128 MB by default), it is easy to calculate how many blocks can be stored on a disk.
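For instance, with a hypothetical helper max_full_blocks. The result is an upper bound on full-size 128 MB blocks; it ignores disk space reserved for the operating system or non-HDFS use, and smaller final blocks mean a disk can in practice hold more block files than this.

```python
GB, TB = 1024 ** 3, 1024 ** 4
BLOCK_SIZE = 128 * 1024 ** 2  # default 128 MB

def max_full_blocks(disk_bytes, block_size=BLOCK_SIZE):
    """How many full-size blocks fit on a disk (floor division)."""
    return disk_bytes // block_size

print(max_full_blocks(4 * TB))    # 32768
print(max_full_blocks(500 * GB))  # 4000
```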

4. Simple storage mechanism for DataNodes

Blocks simplify storage on the DataNodes. The NameNode maintains the metadata for all blocks, so a DataNode does not need to concern itself with file-level metadata such as permissions; it only stores block data.
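The division of responsibilities can be sketched with a toy model. The classes FileEntry, ToyNameNode and ToyDataNode are hypothetical and greatly simplified (real DataNodes, for instance, also keep checksum data for each block); the point is only that file-level metadata such as owner and permissions lives on the NameNode, while DataNodes hold raw block bytes keyed by block ID.

```python
from dataclasses import dataclass, field

@dataclass
class FileEntry:
    """File-level metadata that only the NameNode tracks (hypothetical toy model)."""
    owner: str
    permissions: str
    block_ids: list

@dataclass
class ToyNameNode:
    files: dict = field(default_factory=dict)      # path -> FileEntry
    locations: dict = field(default_factory=dict)  # block_id -> [DataNode names]

@dataclass
class ToyDataNode:
    blocks: dict = field(default_factory=dict)     # block_id -> raw bytes, nothing else

nn = ToyNameNode()
dn1, dn2 = ToyDataNode(), ToyDataNode()

# Writing a two-block file: the DataNodes receive only bytes keyed by block ID...
dn1.blocks[1001] = b"first block bytes"
dn2.blocks[1002] = b"last block"
# ...while ownership, permissions and block locations live on the NameNode.
nn.files["/data/log.txt"] = FileEntry("alice", "rw-r--r--", [1001, 1002])
nn.locations.update({1001: ["dn1"], 1002: ["dn2"]})

# Reading: ask the NameNode where the blocks are, then fetch bytes from DataNodes.
datanodes = {"dn1": dn1, "dn2": dn2}
entry = nn.files["/data/log.txt"]
content = b"".join(datanodes[nn.locations[b][0]].blocks[b] for b in entry.block_ids)
print(content)  # b'first block byteslast block'
```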

Conclusion

To sum up, the HDFS data block is the basic unit in which HDFS stores data. The default HDFS block size is 128 MB, and you can configure it as required. HDFS blocks are easy to replicate between DataNodes, which gives HDFS fault tolerance and high availability.
