Apache Spark SQL Tutorial : Quick Guide For Beginners

This blog completely aims to learn detailed concepts of Apache Spark SQL, supports structured data processing. Also, offers to work with datasets in Spark, integrated APIs in Python, Scala, and Java.

In this sparkSQL tutorial, we will explain components of Spark SQL like, datasets and data frames. Moreover, we will also cover Apache Spark SQL catalyst optimizer. To learn concept deeply, we will also study the need for Spark SQL in Spark.

By the end, we will go through Spark SQL advantage, and disadvantages to understand better.

Spark SQL Introduction

Spark SQL supports distributed in-memory computations on the huge scale. It is a spark module for structured data processing. It gives information about the structure of both data & computation takes place.

This extra information helps SQL to perform extra optimizations. The major aspect of Spark SQL is that we can execute SQL queries. It can also be used to read data from an existing hive installation. When SQL runs in another programming language, then results come as dataset/dataframe.

By using the command-line or over JDBC/ODBC, we can interact with the SQL interface.

Spark SQL offers three main capabilities for using structured and semi-structured data. They are following below:

- Spark SQL provides a dataframe abstraction in Python, Java, and Scala. It simplifies working with structured datasets. In Spark, SQL dataframes are same as tables in a relational database.

- Spark SQL can read and write data in various structured formats, such as JSON, hive tables, and parquet.

- By using SQL, we can query the data, both inside a Spark program and from external tools that connect to Spark SQL.

Same as Spark in Spark SQL, developers can switch back and forth between different APIs. Thus, it is the most natural way to express a given transformation.

Why Spark SQL Came Into Picture?

As Apache Hive, Spark SQL also originated to run on top of Spark and is now integrated with the Spark stack. There were certain limitations of Apache Hive as list-up below. Spark SQL was come into the picture to overcome these drawbacks and replace Apache Hive.

Limitations With Hive:

- As for executing the ad-hoc queries, Hive launches MapReduce jobs internally. When it comes to the analysis of medium-sized datasets (10 to 200 GB) MapReduce lags in the performance.

- There is no resume capability in Hive. So, if the processing dies in the middle of a workflow, we cannot resume from where it got stuck.

- When trash is enabled Hive, we cannot drop encrypted databases in cascade, that leads to an execution error. Afterwards, users have to use purge option to skip trash instead of drop.

Due to these drawbacks, Spark SQL performace is better and it comes in picture.

Spark SQL Datasets

It is an interface, provides the advantages of RDDs with the comfort of Spark SQL’s execution engine. It is a distributed collection of data to construct a dataset, we can use JVM objects. Afterwards, it can manipulate using functional transformations such as map, flatMap, filter, etc. and many more.

In two languages, dataset API is available like Scala and Java. R and Python do not support dataset API. But, as Python is very dynamic in nature. It provides many of the benefits of the dataset API, such as we can access the field of a row by name naturally row.columnName.

Spark SQL DataFrames

As similar as RDD, dataframes are also immutable in nature. In dataframes, data is organized into named columns. Also, they are similar to a relational database. It is designed large data sets processing even easier.

There are following ways to create dataframes such as:

- With structured data files

- From tables in Hive

- With external databases

- By using existing RDD

Dataframes provides richer optimizations to us. API of dataframes is available in Scala, Java, Python, and R as well. Dataframe is represented by a dataset of rows in Scala and Java. It is simply a type alias of dataset[Row] in Scala API.

Whereas in Java API, users need to use dataset<Row> to represent a dataframe. SQL provides a domain specific language API to manipulate our distributed data. It also makes Spark accessible to a wider audience, beyond specialized data engineers.

Features of Spark SQL DataFrame

Few characteristics of dataframe in spark are:

- It provides an ability to process the data in the size of kilobytes to petabytes. Even on a single node cluster to large cluster.

- Dataframes supports different data formats, such as Avro, csv, elastic search, and cassandra. It also supports storage systems like HDFS, HIVE tables, MySQL, etc.

- By using Spark-core, it can be easily integrated with all big data tools and frameworks.

- Dataframes provides API for Python, Java, Scala, as well as R programming.

Spark Catalyst Optimizer

We can say, most of the power of Spark SQL comes due to catalyst optimizer. It primarily leverages functional programming constructs of Scala such as pattern matching. Catalyst optimizer offers a general framework for transforming trees.

Those we can use to perform analysis, optimization, planning, and runtime code generation.

Generally, there are two primary goals of catalyst optimizer:

- It makes adding new optimization techniques easy.

- This optimizer also enables external developers to extend the optimizer.

Basically, in four phases, it uses catalyst’s transformation framework such as :

- By analyzing a logical plan to resolve references

- With logical plan optimization

- By physical planning

- With code generation to compile the parts of the query to Java bytecode

In addition, it optimizes all the queries written in Spark SQL and dataframe DSL. It enhances the speed of the system. Optimizer helps us to run queries much faster than their counter RDD part. As much as fast speed, it will have more performance it will increase.

There is a library “Spark Catalyst” as a rule-based system. Each rule focusses on the specific optimization. Likewise, constantfolding focus on eliminating constant expression from the query.

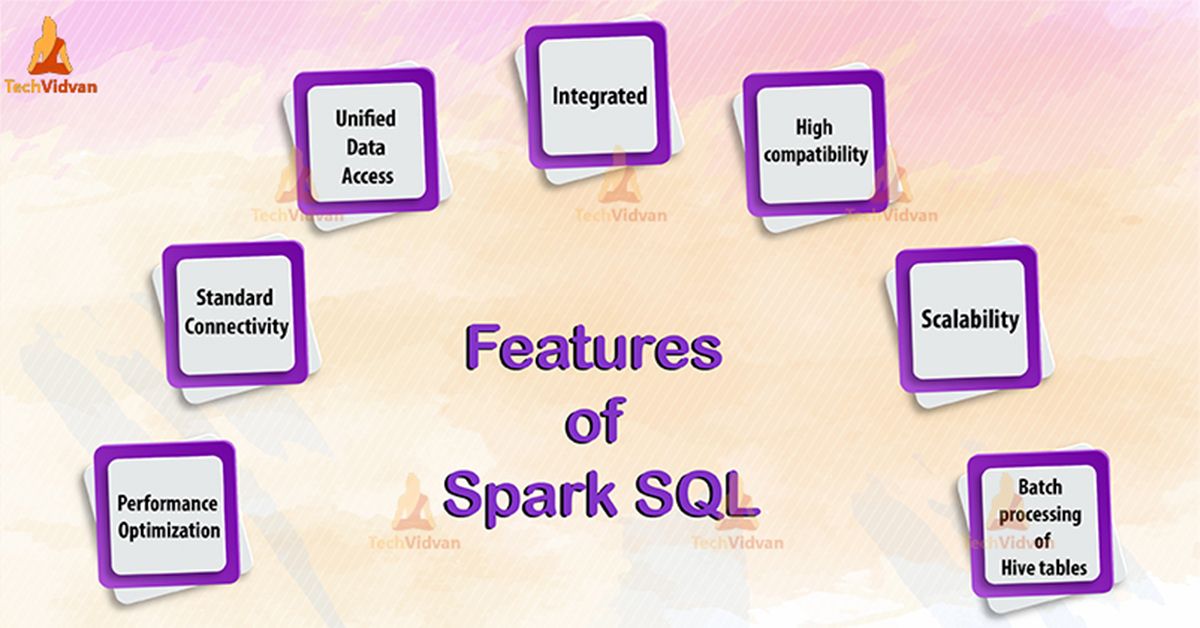

Features of Spark SQL

1. Spark SQL is Integrated

With integrated APIs in Python, Scala etc. we query structured data as RDD in Spark. Due to integration, we can easily run SQL queries alongside complex analytic algorithms.

2. Unified data access

For working with structured data, Schema-RDDs provide a single interface. Also, includes Apache Hive tables, parquet files, and JSON files.

3. Hive compatibility

It reuses the Hive front-end and meta store. In SparkSQL, we can have full compatibility with current Hive data, queries and UDFs.

4. Standard connectivity

Offers server mode with the connectivity of industry standard JDBC and ODBC.

5. Scalability

We can use the same engine for both interactive as well as long queries.

6. Performance optimization

In SparkSQL, each SQL query converts to a logical plan. This is possible by using the query optimization engine. Moreover, it converts to many physical execution plans. It selects the most optimal physical plan for execution of the entire plan.

7. For batch processing of Hive tables

For fast batch processing of Hive tables, we can use Spark SQL.

Uses of Spark SQL

- Most importantly, it executes SQL queries.

- We can read data from existing Hive installation by using SparkSQL.

- While we run SQL, at another programming language, it results in a dataset/dataframe.

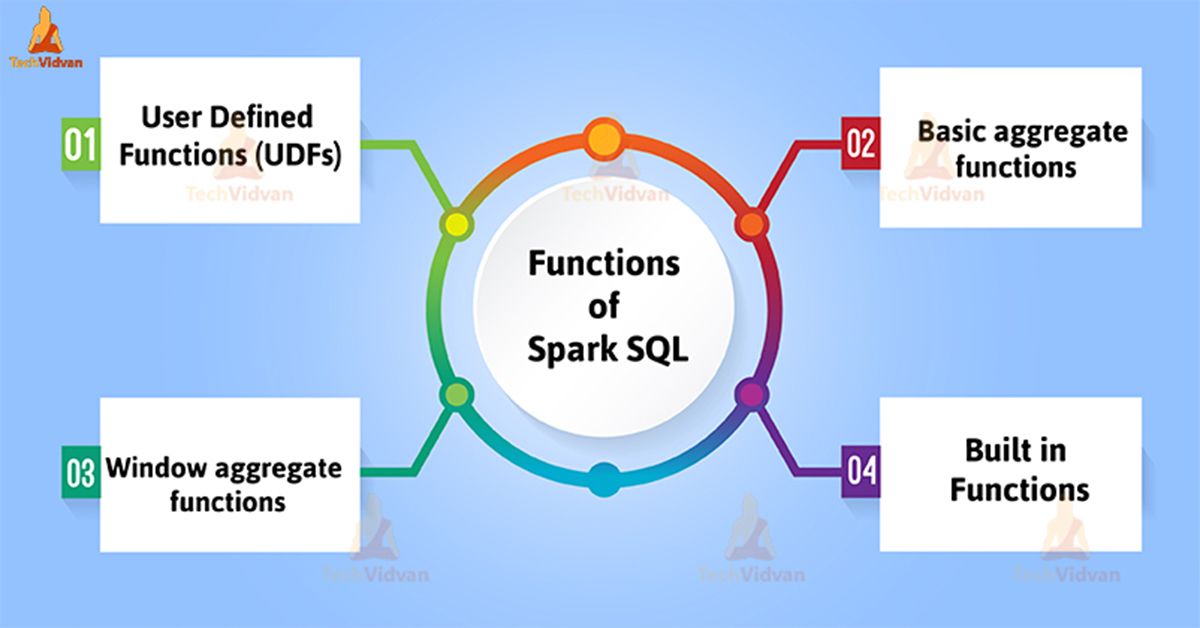

Functions defined by Spark SQL

There are following functions defined by Spark SQL, such as :

1. User Defined Functions (UDFs)

It takes values from a single row as input. Afterwards, it generates a single return value for every input row.

2. Basic aggregate functions

It operates on a group of rows, then calculates a single return value per group.

3. Window aggregate functions

It operates on a group of rows. It also calculates a single return value for each row in a group.

4. Built-in function

Spark SQL offers a built-in function to process the column value.

By using the following command we can access built-in function:

Import org.apache.spark.sql.functions

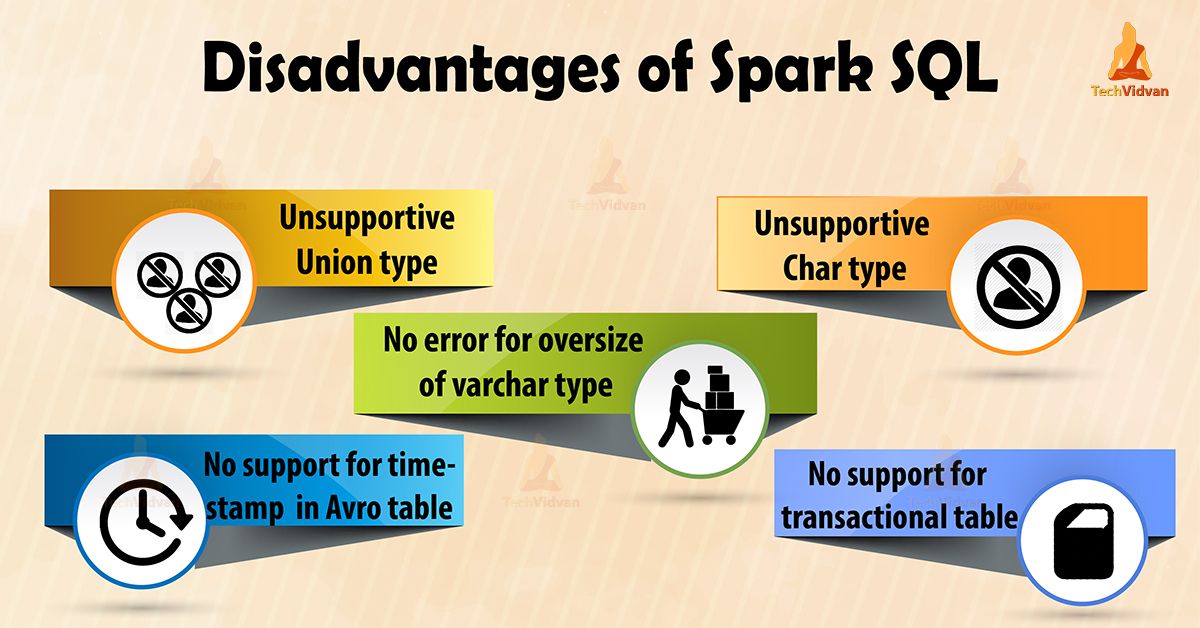

Disadvantages of Spark SQL

There are also several disadvantages of working with SparkSQL. Let’s discuss them one by one:

1. Unsupportive union type

It is impossible to create or read a table containing union fields.

2. No error for oversize of varchar type

While we work with SparkSQL, it considers varchar as a string. Therefore it has no size limit.

3. No support for transactional table

In SQL, Hive transactions are not supported.

4. Unsupportive char type

In SparkSQL char type (fixed-length strings) are not supported.

Conclusion

As a result, Spark SQL provides the most natural way to express the structured data. It is a module of Apache Spark which analyses the structured data. Working with SQL Spark enhances the compatibility of the system.

As discussed earlier, SparkSQL provides scalability and also offers standard connectivity. Hence, it enhances the efficiency of the system.