Limitations of Apache Spark: Ways to Overcome Spark Limitations

As we know, Apache Spark is a lightning-fast big data solution with expressive development APIs. These APIs let data workers run streaming workloads, which require continuous access to datasets.

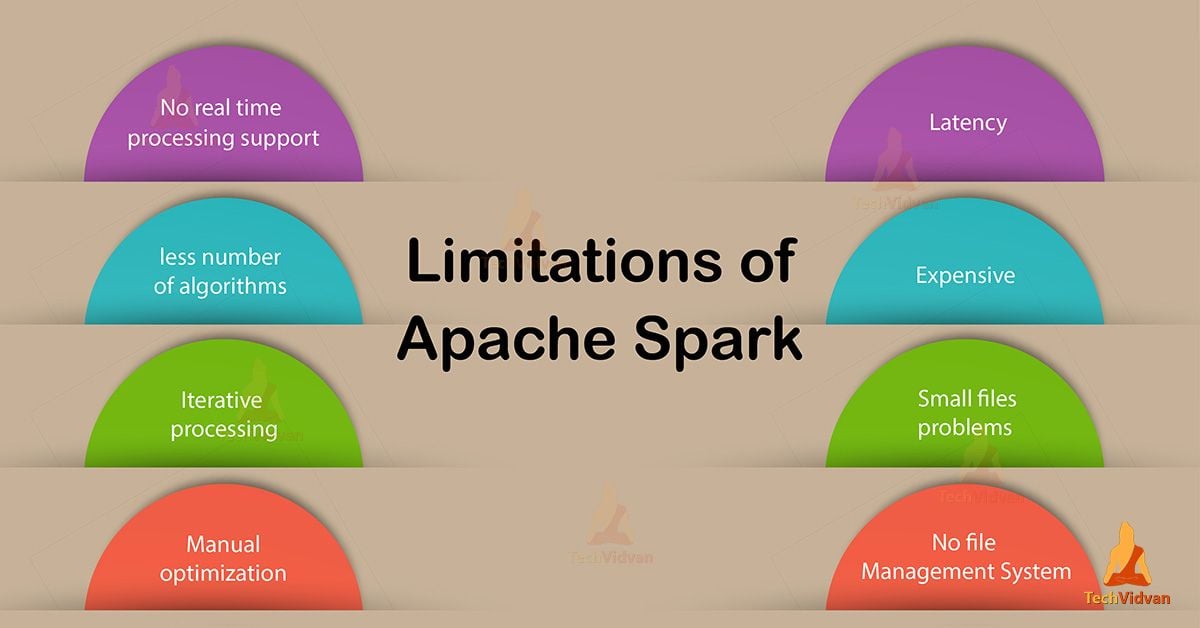

While working with Apache Spark, everyone runs into some limitations. This blog focuses on the limitations, or disadvantages, of Apache Spark.

These include the lack of true real-time processing, the small file issue, the absence of a file management system, and more. We will cover each limitation of Spark and understand it in detail.

We will also learn how to overcome the limitations/drawbacks of Spark.

What are the limitations of Apache Spark

Apache Spark is one of the trending projects of the Apache Software Foundation, and many Hadoop projects are moving from MapReduce to Spark. Although Spark overcomes some major problems of MapReduce, it has various drawbacks of its own.

As a result, some industries have started shifting to Apache Flink to overcome Spark's limitations.

Now let’s discuss limitations of Apache Spark in detail:

1. No File Management System

Spark does not come with a file management system of its own; it depends on other storage systems. So it needs to be integrated with one: if not HDFS, then another cloud-based data platform. This is one of the fundamental issues of Spark.

2. No Support for Real-Time Processing

Spark does not support true real-time processing. In Spark Streaming, live input data is automatically divided into batches of a pre-defined interval, and each batch of data is handled as a Spark RDD.

These RDDs are then processed using operations such as map, reduce, and join. Because the data is divided into batches, the results are also returned in batches.

In other words, Spark Streaming performs micro-batch processing, which makes it near-real-time rather than truly real-time for live data.
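To make the micro-batch model concrete, here is a minimal sketch in plain Python (not the Spark Streaming API). It assumes events arrive as hypothetical `(timestamp, value)` pairs, groups them into batches of a fixed interval, and then runs a small map/reduce-style step on each batch, so the results also come out one batch at a time.

```python
from collections import defaultdict


def micro_batches(events, interval):
    """Group (timestamp, value) events into fixed-interval batches.

    Batch k holds every event with k * interval <= timestamp < (k + 1) * interval.
    Intervals with no events still yield an empty batch, like a clock ticking
    at every interval.
    """
    buckets = defaultdict(list)
    for ts, value in events:
        buckets[int(ts // interval)].append(value)
    if not buckets:
        return []
    return [buckets.get(k, []) for k in range(max(buckets) + 1)]


def process(batch):
    """Stand-in for map + reduce on one batch: square each value, then sum."""
    return sum(v * v for v in batch)


events = [(0.1, 1), (0.4, 2), (1.2, 3), (1.9, 4), (2.5, 5)]
batches = micro_batches(events, interval=1.0)
results = [process(b) for b in batches]
print(batches)  # [[1, 2], [3, 4], [5]]
print(results)  # [5, 25, 25]
```

An event that arrives just after a batch boundary is not processed until its whole batch closes, which is why this model counts as near real-time.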

3. Small File Issue

Small files were already a major issue when we worked with Hadoop: HDFS is designed for a limited number of large files rather than a large number of small files.

If we use Spark with HDFS, the same issue occurs. A different pattern is to store all the data zipped (compressed) in S3, which works well in general.

The problem arises when there are many small zipped files. Spark then has to collect those files over the network and uncompress them, and a zipped file can only be uncompressed when the complete file is on one core. As a result, a lot of time is spent simply burning cores unzipping files in sequence.

This long process slows down our processing. Moreover, each file ends up as its own partition, so efficient processing requires repartitioning the data, which means extensive shuffling over the network.
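The partition arithmetic behind this can be sketched in a few lines of Python. It is a rough model under stated assumptions: a 128 MB split size (a common HDFS block size), exactly one read task per gzip file because gzip is not splittable, and `ceil(size / split)` tasks for a splittable file. `count_tasks` is a hypothetical helper, not a Spark function.

```python
import math

MB = 1024 * 1024
SPLIT_BYTES = 128 * MB  # assumed split size


def count_tasks(file_sizes, splittable):
    """Estimate how many read tasks (partitions) a set of files produces."""
    if splittable:
        # A splittable file is cut into ceil(size / SPLIT_BYTES) chunks.
        return sum(max(1, math.ceil(size / SPLIT_BYTES)) for size in file_sizes)
    # A gzip file must be decompressed start to end on one core, so each file
    # becomes exactly one task, however small or large it is.
    return len(file_sizes)


small_gz = [2 * MB] * 10_000   # 10,000 small gzip files of 2 MB each
one_big = [10_000 * 2 * MB]    # the same 20,000 MB in one splittable file
print(count_tasks(small_gz, splittable=False))  # 10000
print(count_tasks(one_big, splittable=True))    # 157
```

Ten thousand one-file partitions are far too many for efficient processing, so the data usually has to be repartitioned, and that is where the shuffle comes from.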

4. Not Cost-Effective

When we talk about cost-efficient processing of big data, keeping data in memory is not cheap. Spark's memory consumption is very high, because it needs a lot of RAM to process data in memory.

The additional memory needed to run Spark is costly, so in-memory processing can be quite expensive, especially compared with the relatively low cost of disk space and the option of running Hadoop MapReduce. Memory usage is also not handled in a user-friendly manner. As a result, the cost of running Spark can be very high.
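One way to see why the memory bill grows is to estimate how much of an executor's heap Spark actually makes available for execution and caching. The sketch below follows Spark's unified memory model as described in its tuning guide: about 300 MB of the heap is reserved, `spark.memory.fraction` (default 0.6) of the remainder is shared by execution and storage, and `spark.memory.storageFraction` (default 0.5) of that region is protected for storage. The helper names and the 1 TB workload are hypothetical, and the estimate is optimistic because it ignores serialization overhead and off-heap memory.

```python
import math

RESERVED_MB = 300  # heap memory reserved before sizing the unified region


def unified_memory_mb(heap_mb, memory_fraction=0.6, storage_fraction=0.5):
    """Return (unified execution+storage memory, protected storage memory) in MB."""
    usable = (heap_mb - RESERVED_MB) * memory_fraction
    storage = usable * storage_fraction
    return usable, storage


def executors_to_cache(dataset_mb, heap_mb):
    """Optimistic count of executors needed to keep dataset_mb in memory.

    Assumes cached data may fill the whole unified region (storage can borrow
    unused execution memory) and that in-memory size equals on-disk size.
    """
    usable, _ = unified_memory_mb(heap_mb)
    return math.ceil(dataset_mb / usable)


usable, storage = unified_memory_mb(8192)  # 8 GB executor heap
print(round(usable, 1), round(storage, 1))  # 4735.2 2367.6
print(executors_to_cache(1024 * 1024, 8192))  # 222 executors for 1 TB
```

Even under these generous assumptions, caching 1 TB needs over 200 executors with 8 GB heaps each, far more hardware than storing the same data on disk.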

5. Window Criteria

As we know, in Spark Streaming, data is divided into small batches of a pre-defined time interval. Therefore, Spark supports time-based window criteria, but not record-based window criteria.
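The difference between the two kinds of window can be sketched in plain Python (not a Spark API). Both functions below build tumbling windows over hypothetical `(timestamp, value)` events: one groups by a fixed time span, the other by a fixed number of records. With uneven arrival times, time-based windows hold varying numbers of records, while record-based windows always hold the same count, except possibly the last one.

```python
def time_windows(events, length):
    """Tumbling time-based windows: group events by [start, start + length)."""
    windows = {}
    for ts, value in events:
        start = (ts // length) * length
        windows.setdefault(start, []).append(value)
    return [windows[s] for s in sorted(windows)]


def count_windows(events, size):
    """Tumbling record-based windows: every `size` records form one window."""
    values = [value for _, value in events]
    return [values[i:i + size] for i in range(0, len(values), size)]


events = [(0, "a"), (1, "b"), (2, "c"), (3, "d"), (7, "e")]
print(time_windows(events, length=5))  # [['a', 'b', 'c', 'd'], ['e']]
print(count_windows(events, size=2))   # [['a', 'b'], ['c', 'd'], ['e']]
```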

6. Latency

Apache Spark has higher latency and lower throughput than Apache Flink.
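Part of that latency follows directly from micro-batching. In the simplified model below (plain Python, ignoring scheduling and network delays), an event can only be processed once the batch interval containing it has closed, so its latency is the time left in that interval plus the batch processing time. A record-at-a-time engine such as Flink does not pay the waiting part. `micro_batch_latency` is a hypothetical helper for illustration.

```python
def micro_batch_latency(arrival, interval, processing):
    """Latency of one event under micro-batching (no scheduling delay).

    The batch covering [k * interval, (k + 1) * interval) is only formed at
    its end, and then takes `processing` seconds to run.
    """
    batch_end = (arrival // interval + 1) * interval
    return batch_end - arrival + processing


arrivals = [0.0, 0.25, 0.5, 0.75]
latencies = [micro_batch_latency(t, interval=1.0, processing=0.25) for t in arrivals]
print(latencies)  # [1.25, 1.0, 0.75, 0.5]
```

In this model the worst case is the full batch interval plus the processing time, so shrinking the interval lowers latency but also leaves less time to process each batch.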

7. Fewer Algorithms

Spark MLlib, Spark's machine learning library, offers fewer algorithms than some alternatives. For example, it lacks the Tanimoto distance.
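When a library lacks an algorithm, one workaround is to implement it yourself. For real-valued vectors the Tanimoto similarity is `a.b / (|a|^2 + |b|^2 - a.b)`, and the Tanimoto distance is one minus that. The plain-Python sketch below computes both; in a Spark job such a function could be wrapped in a user-defined function, although that wrapping is not shown here.

```python
def tanimoto_similarity(a, b):
    """Tanimoto coefficient: a.b / (|a|^2 + |b|^2 - a.b)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    denom = sum(x * x for x in a) + sum(y * y for y in b) - dot
    if denom == 0:
        return 1.0  # only happens when both vectors are all zeros
    return dot / denom


def tanimoto_distance(a, b):
    """Tanimoto distance: 1 - Tanimoto similarity."""
    return 1.0 - tanimoto_similarity(a, b)


print(tanimoto_similarity([1, 1, 0, 1], [1, 0, 1, 1]))  # 0.5
print(tanimoto_distance([2, 3], [2, 3]))                # 0.0
```

For binary vectors this reduces to the Jaccard index: the two vectors above share 2 set positions out of 4 distinct ones.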

8. Iterative Processing

“Iterative” means reusing intermediate results. In Apache Spark, data is iterated in batches, and each iteration is planned and executed separately.
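The cost of running each iteration as a separate job can be modelled with a toy lazy dataset in plain Python. `LazyDataset` below is hypothetical and only imitates two ideas from Spark: transformations are lazy, and an action recomputes the whole lineage unless an intermediate result has been cached. Counting the element-level map calls shows that reusing cached intermediate results keeps each iteration's cost constant, while recomputation grows with every iteration.

```python
class LazyDataset:
    """Toy stand-in for an RDD: a lazy chain of map steps over a source list."""

    steps = 0  # total element-level map calls executed, across all datasets

    def __init__(self, source, parent=None, fn=None):
        self.source = source
        self.parent = parent
        self.fn = fn
        self.should_cache = False
        self.cached = None

    def map(self, fn):
        # Lazy: only records the step; nothing is computed yet.
        return LazyDataset(self.source, parent=self, fn=fn)

    def cache(self):
        # As in Spark, the data is materialized on the first action, not here.
        self.should_cache = True
        return self

    def collect(self):
        # Action: walk back up the lineage unless a cached result exists.
        if self.cached is not None:
            return self.cached
        if self.parent is None:
            result = list(self.source)
        else:
            data = self.parent.collect()
            LazyDataset.steps += len(data)
            result = [self.fn(x) for x in data]
        if self.should_cache:
            self.cached = result
        return result


def iterate(n_iters, use_cache):
    """Run n_iters iterations, each adding one map step and one action."""
    LazyDataset.steps = 0
    ds = LazyDataset([1, 2, 3, 4])
    result = ds.collect()  # the source itself costs no map steps
    for _ in range(n_iters):
        ds = ds.map(lambda x: x + 1)
        if use_cache:
            ds.cache()
        result = ds.collect()  # e.g. a convergence check in each iteration
    return LazyDataset.steps, result


print(iterate(5, use_cache=False))  # (60, [6, 7, 8, 9])
print(iterate(5, use_cache=True))   # (20, [6, 7, 8, 9])
```

Without caching, iteration i recomputes all i map steps, so the total work grows quadratically with the number of iterations.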

9. Manual Optimization

In Spark, jobs have to be optimized manually, and the optimization is usually specific to particular datasets. For example, if we want to control partitioning, we can set the number of Spark partitions ourselves.

To do so, we pass the number of partitions as the second parameter of the parallelize method. More generally, for partitioning and caching in Spark to be correct, they must be controlled manually.
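In PySpark, setting the partition count by hand looks like `sc.parallelize(data, 8)`, where the second argument is the number of slices; the Spark programming guide suggests 2 to 4 partitions per CPU as a typical starting point. The plain-Python sketch below does not use Spark; it only mimics the idea of cutting a local collection into a chosen number of contiguous, nearly equal partitions. `slice_collection` is a hypothetical helper.

```python
def slice_collection(data, num_slices):
    """Split a list into num_slices contiguous, nearly equal partitions."""
    if num_slices < 1:
        raise ValueError("num_slices must be at least 1")
    n = len(data)
    return [data[i * n // num_slices:(i + 1) * n // num_slices]
            for i in range(num_slices)]


print(slice_collection(list(range(10)), 3))  # [[0, 1, 2], [3, 4, 5], [6, 7, 8, 9]]
print(slice_collection(list(range(3)), 5))   # [[], [0], [], [1], [2]]
```

The second call shows why the number has to be chosen with care: asking for more slices than there are elements produces empty partitions that still cost a task each.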

10. Back Pressure Handling

In Apache Spark Streaming, back pressure is not handled implicitly; it has to be handled manually, for example by enabling the spark.streaming.backpressure.enabled setting, which is off by default. Back pressure is the build-up of data at an input or output when a buffer is full and cannot receive more incoming data. No more data can be transferred until the buffer is emptied.
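The buffer behaviour described above can be sketched with Python's standard `queue` module (this is not Spark code). A bounded queue stands in for the buffer: once it is full, a non-blocking put fails, and the producer has to hold back or drop records until the consumer drains some space. Handling that failure by hand is the kind of manual back-pressure logic the text refers to.

```python
import queue

buffer = queue.Queue(maxsize=3)  # bounded buffer between producer and consumer

accepted, rejected = [], []
for record in range(5):
    try:
        buffer.put_nowait(record)  # non-blocking: raises instead of waiting
        accepted.append(record)
    except queue.Full:
        # Back pressure: the buffer is full, so the producer must hold back.
        rejected.append(record)

print(accepted, rejected)  # [0, 1, 2] [3, 4]

buffer.get_nowait()    # the consumer drains one record...
buffer.put_nowait(3)   # ...which frees room for the producer again
print(buffer.qsize())  # 3
```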

Conclusion

Spark makes it easy to write and run complicated data processing and enables computation at a very large scale. Although Spark has many limitations, it is still trending in the big data world.

Because of these drawbacks, other technologies are gaining ground on Spark. For example, Flink offers true real-time processing instead of Spark's micro-batching. In this way, other technologies are overcoming the drawbacks of Spark.