Spark Streaming Window Operations- A Quick Guide

Spark streaming leverages advantage of windowed computations in Apache Spark. It offers to apply transformations over a sliding window of data. In this article, we will learn the whole concept of Apache spark streaming window operations.

Moreover, we will also learn some Spark Window operations to understand in detail.

What are Spark Streaming Window operations

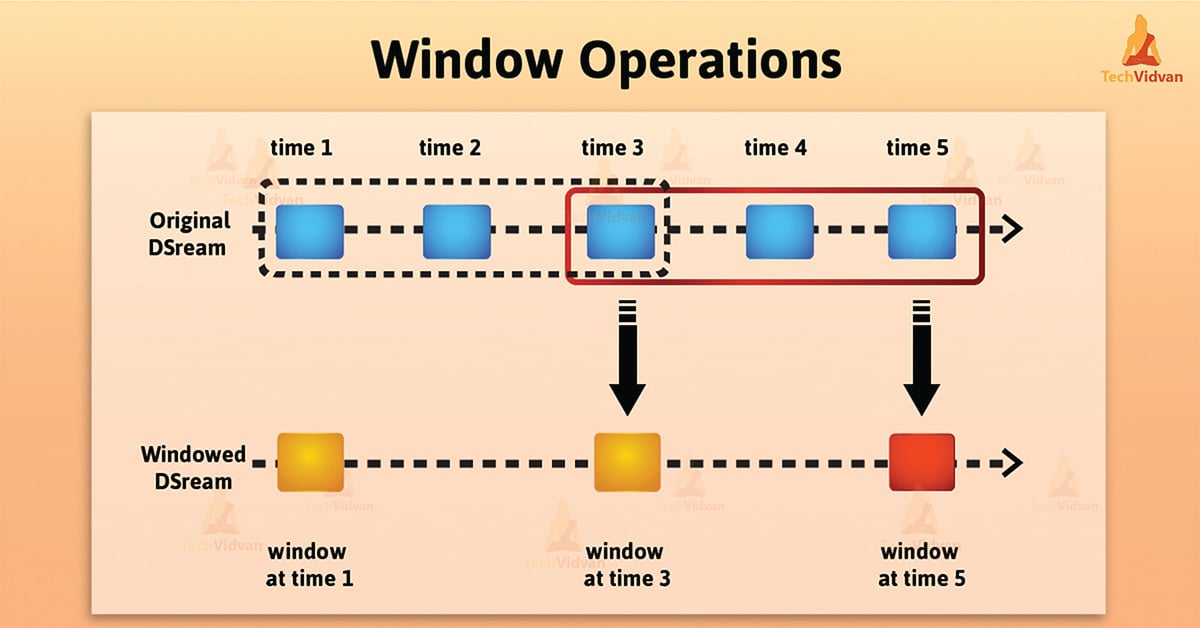

Spark streaming leverages advantage of windowed computations in spark. It offers to apply transformations over a sliding window of data. The figure mentioned below explains this sliding window.

As window slides over a source DStream, the source RDDs that fall within the window are combined. It also operated upon which produces spark RDDs of the windowed DStream. Hence, In this specific case, the operation is applied over the last 3 time units of data, also slides by 2-time units.

Basically, any Spark window operation requires specifying two parameters.

- Window length – It defines the duration of the window (3 in the figure).

- Sliding interval – It defines the interval at which the window operation is performed (2 in the figure).

However, these 2 parameters must be multiples of the batch interval of the source DStream.

Let’s understand this operation with an example.

For example:

import org.apache.spark._

import org.apache.spark.streaming._

import org.apache.spark.streaming.StreamingContext._ // not necessary since Spark 1.3

// Create a local StreamingContext with two working thread and batch interval of 1 second.

// The master requires 2 cores to prevent a starvation scenario.

val conf1 = new SparkConf().setMaster("local[2]").setAppName("NetworkWordCount")

val ssc1 = new StreamingContext(conf1, Seconds(1))

To enhance above example by generating word counts over the last 30 seconds of data, every 10 seconds. We need to apply the reduceByKey operation on the pairs DStream of (word, 1) pairs over the last 30 seconds of data.

Basically, it is possible by using the operation reduceByKeyAndWindow.

// Reduce last 30 seconds of data, every 10 seconds val windowedWordCounts = pairs.reduceByKeyAndWindow((a:Int,b:Int) => (a + b), Seconds(30), Seconds(10))

Common Spark Window Operations

These operations describe two parameters – windowLength and slideInterval.

1. Window (windowLength, slideInterval)

Window operation returns a new DStream. On the basis of windowed batches of the source DStream, it gets computed.

2. CountByWindow (windowLength, slideInterval)

In the stream, countByWindow operation returns a sliding window count of elements.

3. ReduceByWindow (func, windowLength, slideInterval)

ReduceByWindow returns a new single-element stream, that is created by aggregating elements in the stream over a sliding interval using func. However, a function must be commutative and associative, so that it can be computed correctly in parallel.

4. ReduceByKeyAndWindow(func, windowLength, slideInterval, [numTasks])

Whenever we call reduceByKeyAndWindow window on a DStream of (K, V) pairs, it returns a new DStream of (K, V) pairs. Here, we aggregate values of each key, by given reduce function func over batches in a sliding window.

In addition, it uses spark’s default number of parallel tasks, for grouping purpose. Like for local mode, it is 2. While in cluster mode it determines number using spark.default.parallelism config property. To set a different number of tasks, it passes an optional numTasks argument.

5. ReduceByKeyAndWindow (func, invFunc, windowLength, slideInterval, [numTasks])

It is the more efficient version of the above reduceByKeyAndWindow(). As in above one, we calculate the reduced value of each window by using the reduce values of the previous window.

However, here calculations take place by reducing the new data. For calculating, it reduces data which enters the sliding window. Also performs “inverse reducing” of the old data which leaves the window.

Note: Checkpointing must be enabled for using this operation.

6. CountByValueAndWindow(windowLength, slideInterval, [numTasks])

While, we call countByValueAndWindow on a DStream of (K, V) pairs, it returns a new DStream of (K, Long) pairs. Here, the value of each key is its frequency within a sliding window. In one case it is very similar to reduceByKeyAndWindow operation. Here also, we can configure the number of reduce tasks by an optional argument.

Conclusion

As a result, we have seen how spark windowing helps to apply transformations over a sliding window of data. Hence, this feature makes very easy to compute stats for a window of time.

Therefore, it increases the efficiency of the system. Ultimately, we have learned the whole about spark streaming window operations in detail.