Rising Big Data Technologies You Must Know

In this article, we will look at the latest and most widely used big data technologies for dealing with ever-growing volumes of data.

First, we will look at the rising trends in big data, and then we will explore different big data technologies such as Apache Hadoop, Apache Spark, Apache Kafka, NoSQL databases like MongoDB and Cassandra, Tableau, and many more.

Rising Big Data

“Data is the new science. Big Data holds the answers.” – Pat Gelsinger

The term “rising Big Data” describes the exponential growth of large, complex datasets produced by many sources. This increase in data volume, velocity, and variety presents both opportunities and difficulties. Organisations must contend with managing and processing enormous datasets, protecting data security and privacy, and controlling data quality.

On the other hand, big data offers opportunities for data-driven insights, personalised services, scientific advances, and predictive analytics. To fully realise the promise of the growing big data market, businesses and organisations must invest in modern data management infrastructure, implement effective data processing methods, and make data privacy and security a top priority.

To get the most out of the information at your disposal, it is essential to have data specialists trained in data analysis and data science.

Addressing these challenges and taking advantage of the growth of big data requires a comprehensive strategy that combines technology, skilled people, and ethical data practices. By using big data ethically and creatively, organisations can gain a competitive edge, spur innovation, and make informed decisions that benefit many facets of business and society.

With that background, let us look at the big data technologies that large enterprises and startups alike have adopted to gain business value.

Big Data Technologies

Big data technologies are software tools designed to analyze, process, and extract information from large, often unstructured datasets that traditional data processing software cannot handle.

Companies need big data processing technologies to analyze massive amounts of real-time data. They use these technologies to make predictions that reduce the risk of failure.

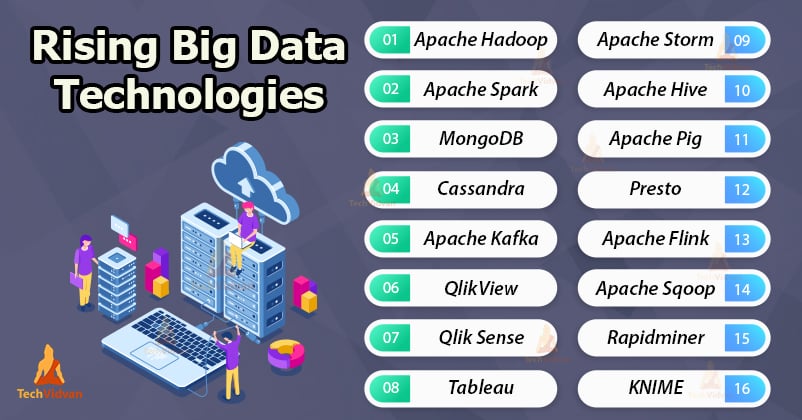

The topmost big data technologies are:

1. Apache Hadoop

Apache Hadoop is one of the most widely used big data tools. It is an open-source software framework developed by the Apache Software Foundation for storing and processing big data. Hadoop stores and processes data in a distributed computing environment across a cluster of commodity hardware.

Hadoop is an inexpensive, fault-tolerant, and highly available framework that can process data of any size and format. It is written in Java; at the time of writing, the stable release was Hadoop 3.1.3. HDFS (the Hadoop Distributed File System) provides highly reliable storage by replicating each data block across several machines, three by default.

Features of Apache Hadoop:

- It is scalable and fault-tolerant.

- The framework keeps working even under unfavorable conditions such as a machine crash.

- The framework stores data on commodity hardware, which makes Hadoop cost-effective.

- Hadoop stores and processes data in a distributed manner. The data is processed in parallel, which makes processing fast.

Companies using Hadoop include Facebook, LinkedIn, IBM, MapR, Intel, Microsoft, and many more.
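To make Hadoop's distributed, parallel processing model (MapReduce) more concrete, here is a minimal single-machine sketch of a word count in plain Python. It is not Hadoop's Java API: the function names `map_task`, `shuffle`, `reduce_task`, and `run_job` are hypothetical, and a thread pool merely stands in for map tasks that would run on different cluster nodes, each over its own input split.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_task(split):
    """Map phase: emit a (word, 1) pair for every word in one input split."""
    return [(word, 1) for line in split for word in line.lower().split()]


def shuffle(mapped_outputs):
    """Shuffle phase: group the emitted values by key across all map tasks."""
    groups = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_task(key, values):
    """Reduce phase: combine all values that share one key."""
    return key, sum(values)


def run_job(splits):
    # Each split gets its own map task; threads stand in for cluster nodes here.
    with ThreadPoolExecutor() as pool:
        mapped_outputs = list(pool.map(map_task, splits))
    groups = shuffle(mapped_outputs)
    return dict(reduce_task(key, values) for key, values in groups.items())


splits = [["Big data is big"], ["data is everywhere"]]
print(run_job(splits))  # {'big': 2, 'data': 2, 'is': 2, 'everywhere': 1}
```

On a real cluster, Hadoop tries to run each map task on a node that already stores that split, so the computation moves to the data rather than the other way around.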

2. Apache Spark

Apache Spark is another popular open-source big data tool, designed to speed up Hadoop big data processing. The main objective of the Apache Spark project was to keep the advantages of MapReduce’s distributed, scalable, fault-tolerant processing framework while making it more efficient and easier to use.

It provides in-memory computing capabilities to deliver speed. Spark supports both real-time and batch processing and provides high-level APIs in Java, Scala, Python, and R.

Features of Apache Spark:

- According to the Spark project, applications can run up to 100 times faster than Hadoop MapReduce in memory and up to ten times faster on disk.

- Apache Spark can work with different data stores (such as HDFS, Cassandra, and OpenStack Swift), which gives it more flexibility than Hadoop.

- Spark includes the MLlib library, which offers machine learning algorithms such as clustering, collaborative filtering, regression, and classification.

- Apache Spark can run on Hadoop, Kubernetes, Apache Mesos, standalone, or in the cloud.
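Much of Spark's speed comes from two ideas: transformations are lazy (they only record how to build a dataset), and a dataset marked for caching is kept in memory after the first action computes it, so later actions skip recomputation. The sketch below illustrates both in plain Python. `MiniRDD` is a hypothetical class, not PySpark; in PySpark the analogous RDD calls are `map`, `filter`, `cache`, `collect`, and `count`.

```python
class MiniRDD:
    """Hypothetical single-process stand-in for a Spark RDD (not the PySpark API)."""

    def __init__(self, compute):
        self._compute = compute      # zero-argument function that (re)builds this dataset
        self._cache_enabled = False
        self._cached = None          # materialized rows kept in memory once computed

    @staticmethod
    def from_source(data, stats):
        def compute():
            stats["source_reads"] += 1   # count how often the "disk" source is scanned
            return iter(data)
        return MiniRDD(compute)

    # Transformations are lazy: they only describe how to build a new dataset.
    def map(self, fn):
        return MiniRDD(lambda: (fn(x) for x in self._iterate()))

    def filter(self, pred):
        return MiniRDD(lambda: (x for x in self._iterate() if pred(x)))

    def cache(self):
        self._cache_enabled = True
        return self

    def _iterate(self):
        if not self._cache_enabled:
            return self._compute()       # recompute from the lineage every time
        if self._cached is None:
            self._cached = list(self._compute())
        return iter(self._cached)

    # Actions trigger the actual computation.
    def collect(self):
        return list(self._iterate())

    def count(self):
        return sum(1 for _ in self._iterate())


stats = {"source_reads": 0}
evens_squared = (
    MiniRDD.from_source(range(10), stats)
    .map(lambda x: x * x)
    .filter(lambda x: x % 2 == 0)
    .cache()
)
print(stats["source_reads"])    # 0: nothing has run yet
print(evens_squared.collect())  # [0, 4, 16, 36, 64]
print(evens_squared.count())    # 5, served from the in-memory cache
print(stats["source_reads"])    # 1: the source was scanned only once
```

Without `.cache()`, each action would replay the whole chain from the source, which is roughly what happens when a multi-step MapReduce pipeline writes intermediate results to disk and reads them back.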

3. MongoDB

MongoDB is a NoSQL, document-oriented database first released in 2009 by 10gen (now MongoDB Inc.). It is written mainly in C++ and is easy to set up.

MongoDB is one of the most popular databases for big data. It simplifies the management of unstructured or semi-structured data, as well as data whose structure changes frequently.

MongoDB is used with the MEAN software stack, .NET applications, and Java platforms. It also runs flexibly on cloud infrastructure.

Features of MongoDB:

- It is highly reliable, as well as cost-effective.

- It has a powerful query language that supports aggregation, geospatial search, text search, and graph search.

- It supports ad hoc queries, indexing, sharding, replication, etc.

- It offers many capabilities of a relational database, such as secondary indexes and, since version 4.0, multi-document ACID transactions.

Companies like Facebook, eBay, MetLife, Google, etc. use MongoDB.
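MongoDB's ad hoc queries are expressed as filter documents. The sketch below is a plain-Python illustration of how such a filter selects documents that do not share a fixed schema. `matches` and `find` are hypothetical helpers that support only equality, `$gt`, `$lt`, and `$in`; the filter dictionaries have the same shape as real MongoDB filters, so with the official PyMongo driver the same dictionary could be passed to `collection.find()`.

```python
# A tiny subset of MongoDB-style comparison operators (illustrative only).
OPERATORS = {
    "$gt": lambda value, arg: value is not None and value > arg,
    "$lt": lambda value, arg: value is not None and value < arg,
    "$in": lambda value, arg: value in arg,
}


def matches(doc, query):
    """Return True if a document satisfies every condition in a MongoDB-style filter."""
    for field, condition in query.items():
        value = doc.get(field)  # a missing field is treated as None
        if isinstance(condition, dict):
            for op, arg in condition.items():
                if op not in OPERATORS:
                    raise ValueError(f"unsupported operator: {op}")
                if not OPERATORS[op](value, arg):
                    return False
        elif value != condition:  # a plain value means equality
            return False
    return True


def find(collection, query):
    return [doc for doc in collection if matches(doc, query)]


users = [
    {"name": "Asha", "age": 34, "city": "Pune"},
    {"name": "Ben", "age": 27, "city": "Leeds", "roles": ["admin"]},
    {"name": "Chen", "city": "Pune"},  # no "age": documents need not share a schema
]
print([u["name"] for u in find(users, {"city": "Pune", "age": {"$gt": 30}})])  # ['Asha']
print([u["name"] for u in find(users, {"city": {"$in": ["Pune", "Leeds"]}})])  # ['Asha', 'Ben', 'Chen']
```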

4. Apache Cassandra

Apache Cassandra is an open-source, decentralized, distributed NoSQL (Not Only SQL) database that provides high availability and scalability without compromising performance.

It is one of the most widely used big data tools and can accommodate structured as well as unstructured data. It uses the Cassandra Query Language (CQL) to interact with the database.

Cassandra is an excellent platform for mission-critical data because of its linear scalability and fault tolerance on inexpensive hardware or cloud infrastructure.

Features of Apache Cassandra:

- Due to Cassandra’s decentralized architecture, there is no single point of failure in a cluster.

- It is highly fault-tolerant and durable.

- Cassandra’s performance scales linearly as nodes are added.

- It is often reported to outperform popular NoSQL alternatives in real applications.

Companies like Instagram, Netflix, GitHub, GoDaddy, eBay, Hulu, etc. use Cassandra.
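Cassandra's lack of a single point of failure and its linear scaling rest on spreading rows over a token ring that every node participates in. The following is a minimal consistent-hashing sketch in Python under stated assumptions: MD5 stands in for Cassandra's default Murmur3 partitioner, `TokenRing` and its methods are hypothetical, and replicas are chosen by walking the ring clockwise to the next distinct nodes, roughly in the spirit of Cassandra's SimpleStrategy.

```python
import bisect
import hashlib


def token(key):
    """Map a key to a position on the ring (MD5 is a stand-in hash here)."""
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class TokenRing:
    def __init__(self, nodes, vnodes=8):
        self._ring = []          # sorted (token, node) pairs
        self._vnodes = vnodes    # each node owns several virtual tokens
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        for i in range(self._vnodes):
            bisect.insort(self._ring, (token(f"{node}#{i}"), node))

    def replicas(self, key, rf=3):
        """Walk clockwise from the key's token and collect `rf` distinct nodes."""
        distinct_nodes = len({node for _, node in self._ring})
        i = bisect.bisect_left(self._ring, (token(key),))
        chosen = []
        while len(chosen) < min(rf, distinct_nodes):
            node = self._ring[i % len(self._ring)][1]  # wrap around the ring
            if node not in chosen:
                chosen.append(node)
            i += 1
        return chosen


ring = TokenRing(["node1", "node2", "node3"])
print(ring.replicas("user:42"))  # three distinct nodes holding copies of this row

keys = [f"user:{i}" for i in range(1000)]
before = {k: ring.replicas(k, rf=1)[0] for k in keys}
ring.add_node("node4")
after = {k: ring.replicas(k, rf=1)[0] for k in keys}
moved = [k for k in keys if before[k] != after[k]]
# Only keys that the new node now owns move; every other key stays put.
print(len(moved), all(after[k] == "node4" for k in moved))
```

Because adding a node only takes over the ranges next to its own tokens, capacity grows without reshuffling the whole dataset, which is what makes near-linear scaling possible.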

5. Apache Kafka

Apache Kafka is an open-source distributed streaming platform developed by the Apache Software Foundation. It is a fault-tolerant, publish-subscribe messaging system and a robust queue capable of handling large volumes of data.

It lets applications pass messages from one point to another. Kafka is used for building real-time streaming data pipelines and real-time streaming applications. Kafka is written in Java and Scala.

Apache Kafka integrates very well with Spark and Storm for real-time streaming data analysis.

Features of Apache Kafka:

- Kafka can work with huge volumes of data easily.

- Kafka is highly scalable, distributed and fault-tolerant.

- It has high throughput for both publishing and subscribing messages.

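- With replication configured appropriately (for example, acks=all on producers), it can survive broker failures without downtime or loss of acknowledged data.

Companies like LinkedIn, Twitter, Yahoo, and Netflix use Kafka.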
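The publish-subscribe model described above can be pictured as a set of append-only partition logs, with each consumer group tracking its own read position (offset). This is a minimal in-memory sketch in Python, not the Kafka client API: `Topic` and `ConsumerGroup` are hypothetical, `zlib.crc32` stands in for Kafka's murmur2-based default partitioner, and offsets are "committed" by simply updating a list.

```python
import zlib


class Topic:
    """Hypothetical in-memory topic: a fixed set of append-only partition logs."""

    def __init__(self, name, num_partitions=3):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def produce(self, key, value):
        # Records with the same key always land in the same partition,
        # which preserves their relative order (crc32 is a stand-in hash).
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)


class ConsumerGroup:
    """Reads every partition from its own committed offsets."""

    def __init__(self, topic):
        self.topic = topic
        self.offsets = [0] * len(topic.partitions)

    def poll(self):
        records = []
        for p, log in enumerate(self.topic.partitions):
            records.extend(log[self.offsets[p]:])
            self.offsets[p] = len(log)  # "commit" the new offset
        return records


orders = Topic("orders")
for i, user in enumerate(["alice", "bob", "alice"]):
    orders.produce(user, f"order-{i}")

billing, analytics = ConsumerGroup(orders), ConsumerGroup(orders)
print(len(billing.poll()), len(analytics.poll()))  # 3 3: each group reads every record
print(billing.poll())                               # []: nothing new since the last poll
orders.produce("carol", "order-3")
print(billing.poll())                               # [('carol', 'order-3')]
```

Unlike a classic queue, reading does not delete anything: records stay in the log, so independent groups (here, billing and analytics) each see the full stream.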

Splunk

Splunk captures, indexes, and correlates real-time data in a searchable repository and generates insightful graphs, reports, alerts, and dashboards from it.

Features:

- Support for real-time data processing.

- It accepts input data in many formats, such as JSON, CSV, configuration files, log files, and others.

- Using Splunk, one can monitor business metrics and make informed decisions.

- With Splunk, we can analyze the performance of any IT system.

- We can incorporate AI into our data strategy through Splunk.

Companies like JPMorgan Chase, Wells Fargo, Verizon, Domino’s, and Porsche use Splunk.
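Splunk can automatically extract key=value pairs from raw events at search time, and its search language (SPL) then aggregates over those fields, for example with `stats count by service`. The sketch below imitates that idea in plain Python on a few made-up log lines. It is not SPL or a Splunk API; the regular expression and the helper names `extract_fields` and `count_by` are assumptions for illustration.

```python
import re
from collections import Counter

# Matches key=value or key="quoted value" pairs inside a raw log line.
KV_PATTERN = re.compile(r'(\w+)=("[^"]*"|\S+)')


def extract_fields(raw_event):
    """Search-time field extraction: turn key=value pairs into a dict."""
    return {key: value.strip('"') for key, value in KV_PATTERN.findall(raw_event)}


def count_by(raw_events, field, **filters):
    """Roughly like `level=ERROR | stats count by service` over raw lines."""
    counts = Counter()
    for raw in raw_events:
        fields = extract_fields(raw)
        if field in fields and all(fields.get(k) == v for k, v in filters.items()):
            counts[fields[field]] += 1
    return counts


logs = [
    '2024-05-01T10:00:01 level=ERROR service=checkout msg="payment timeout"',
    '2024-05-01T10:00:02 level=INFO service=checkout msg="order placed"',
    '2024-05-01T10:00:03 level=ERROR service=search msg="index unavailable"',
    '2024-05-01T10:00:04 level=ERROR service=checkout msg="card declined"',
]
print(count_by(logs, "service", level="ERROR"))  # Counter({'checkout': 2, 'search': 1})
```

Extracting fields when searching, rather than forcing a schema when data is ingested, is what lets such a tool accept logs, JSON, CSV, and configuration files side by side.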

6. QlikView

QlikView is one of the fastest-evolving BI and data visualization tools and is well suited to transforming raw data into knowledge. QlikView allows users to generate business insights by exploring how data items are associated with each other and which data is not related.

QlikView brings a whole new level of analysis, value, and insight to existing data stores with simple, clean, and straightforward user interfaces. It enables users to conduct direct or indirect searches on all data anywhere in the application.

When the user clicks a data point, no queries are fired; all the other fields filter themselves based on the user’s selection. This promotes unrestricted data analysis and helps users make accurate decisions.

Features of QlikView:

- It provides in-memory storage that makes data collection, integration, and analysis very fast.

- It is based on associative data modeling.

- QlikView automatically derives the relationships between data.

- It provides powerful and global data discovery.

- Support for social data discovery and mobile data discovery.
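The associative behaviour described above can be pictured like this: a selection in one field instantly splits the values of every other field into those still associated with it (possible) and those that are not (excluded), without the user writing a query. The Python sketch below is a simplified, single-table illustration under our own assumptions; `associative_state` is a hypothetical helper, and QlikView's real engine works across many linked tables.

```python
def associative_state(rows, selections):
    """Split each field's values into 'possible' and 'excluded' given the selections.

    rows: list of dicts with the same fields; selections: {field: set of selected values}.
    """
    live = [r for r in rows if all(r[f] in chosen for f, chosen in selections.items())]
    state = {}
    for field in rows[0]:
        all_values = {r[field] for r in rows}
        possible = {r[field] for r in live}
        state[field] = {"possible": possible, "excluded": all_values - possible}
    return state


sales = [
    {"customer": "Acme", "country": "India", "product": "Laptop"},
    {"customer": "Acme", "country": "India", "product": "Phone"},
    {"customer": "Globex", "country": "Germany", "product": "Tablet"},
    {"customer": "Initech", "country": "India", "product": "Phone"},
]
state = associative_state(sales, {"country": {"India"}})
print(sorted(state["customer"]["possible"]), sorted(state["customer"]["excluded"]))
# ['Acme', 'Initech'] ['Globex']
print(sorted(state["product"]["excluded"]))  # ['Tablet']
```

Showing the excluded values, not just the matches, is what lets users see which data is unrelated to their selection.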

7. Qlik Sense

Qlik Sense is a data analysis and data visualization tool. It operates with the associative QIX engine, which enables users to associate and link data from different sources and perform dynamic searches and selections.

Both technical and non-technical users use it as a data analytics platform. For anyone looking for a tool to present and analyze data effectively, Qlik Sense is a strong choice.

With a drag-and-drop interface, users can easily create analytical reports that are easy to understand and presented in the form of a story. Client teams can share applications and reports on a centralized hub, export data stories to enhance the business, and share secure data models.

Features of Qlik Sense:

- Qlik Sense uses the associative model.

- It has a centralized hub or dashboard where all the files and reports generated using Qlik software can be shared.

- Qlik Sense can be embedded into other applications and capture data from them.

- It conducts in-memory data comparisons.

- It has a ‘smart search’ feature which helps in analyzing data by interacting with the charts and visualizations.

8. Tableau

Tableau is a powerful data visualization and software solution in the business intelligence and analytics industry.

It is an ideal tool for transforming raw data into an easily understandable format without requiring technical skills or coding knowledge.

Tableau allows users to work on live datasets, turn raw data into valuable insights, and improve the decision-making process.

It offers a rapid data analysis process that produces visualizations in the form of interactive dashboards and worksheets. It integrates with other big data tools.

Features of Tableau:

- In Tableau, with simple drag and drop, one can make visualizations in the form of a Bar chart, Pie chart, Histogram, Treemap, Boxplot, Gantt chart, Bullet chart, and many more.

- Tableau supports a wide range of data sources, from on-premises files (text, CSV, Excel, and other spreadsheets) and relational and non-relational databases to big data platforms, data warehouses, and cloud data.

- It is highly robust and secure.

- It allows the sharing of data in the form of visualizations, dashboards, sheets, etc. in real-time.

9. Apache Storm

Apache Storm is a distributed, real-time computation framework written in Clojure and Java. With Apache Storm, we can reliably process unbounded streams of data. It is a simple tool and can be used with any programming language.

We can use Apache Storm in real-time analytics, continuous computation, online machine learning, ETL, and more.

Features of Storm:

- It is free and open-source.

- It is highly scalable.

- Storm is fault-tolerant and easy to set up.

- Apache Storm guarantees that every tuple will be fully processed, replaying tuples that fail.

- According to the project’s benchmarks, it can process over a million tuples per second per node.

Companies like Yahoo, Alibaba, Groupon, Twitter, and Spotify use Apache Storm.
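Storm's processing guarantee relies on spouts tracking each emitted tuple until it is acknowledged and replaying it if it fails. Below is a single-process Python sketch of that ack/fail cycle. `SentenceSpout`, `split_bolt`, and `run_topology` are hypothetical names loosely modeled on the `nextTuple`, `ack`, and `fail` methods of a Storm spout; this is not Storm's actual API. Because a replay can deliver a tuple more than once, the guarantee is at-least-once; here the simulated failure happens before counting, so nothing is double-counted.

```python
from collections import Counter, deque


class SentenceSpout:
    """Hypothetical spout: emits (msg_id, sentence) tuples and replays failed ones."""

    def __init__(self, sentences):
        self._queue = deque(enumerate(sentences))
        self.pending = {}  # emitted but not yet acknowledged

    def next_tuple(self):
        if not self._queue:
            return None
        msg_id, sentence = self._queue.popleft()
        self.pending[msg_id] = sentence
        return msg_id, sentence

    def ack(self, msg_id):
        del self.pending[msg_id]  # fully processed: forget it

    def fail(self, msg_id):
        self._queue.append((msg_id, self.pending.pop(msg_id)))  # schedule a replay


def split_bolt(sentence):
    return sentence.lower().split()


def run_topology(spout, fail_first_attempt=()):
    """Spout -> split bolt -> count bolt; simulated failures are replayed, not lost."""
    counts = Counter()
    already_failed = set()
    while (tup := spout.next_tuple()) is not None:
        msg_id, sentence = tup
        if msg_id in fail_first_attempt and msg_id not in already_failed:
            already_failed.add(msg_id)
            spout.fail(msg_id)  # e.g. a worker crashed before finishing this tuple
            continue
        counts.update(split_bolt(sentence))  # the counting bolt
        spout.ack(msg_id)
    return counts


spout = SentenceSpout(["Storm processes streams", "streams never end"])
print(run_topology(spout, fail_first_attempt={0}))
print(spout.pending)  # {}: every tuple was eventually acknowledged
```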

10. Apache Hive

Hive is an open-source data warehousing tool for analyzing big data. It uses Hive Query Language (HQL, also called HiveQL), a SQL-like language, to query large datasets.

It is built on top of Hadoop and enables developers to process data stored in Hadoop HDFS without writing complex MapReduce jobs. Users can interact with Hive through a command-line interface such as the Beeline shell.

Features of Apache Hive:

- Hive supports client applications written in many languages, for example through its JDBC, ODBC, and Thrift interfaces.

- It reduces the overhead of writing complex MapReduce jobs.

- HQL syntax is similar to SQL. Thus, one who is familiar with SQL can easily write Hive queries.
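To illustrate how a SQL-like query spares us from hand-writing MapReduce, the sketch below computes page views per country twice: once with a single `GROUP BY` query, and once as explicit map, shuffle, and reduce steps. Python's built-in `sqlite3` is used as a local stand-in because no Hive cluster is assumed; the `page_views` table and its rows are invented for illustration. In Hive, the same `SELECT country, COUNT(*) FROM page_views GROUP BY country` statement would be written the same way and compiled into such jobs for you.

```python
import sqlite3
from collections import defaultdict

# Hypothetical sample data: (country, url) page-view records.
PAGE_VIEWS = [("in", "/home"), ("us", "/home"), ("in", "/cart"), ("de", "/home"), ("in", "/home")]


def views_by_country_sql(rows):
    """Declarative version: one GROUP BY query (the HiveQL would look the same)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE page_views (country TEXT, url TEXT)")
    conn.executemany("INSERT INTO page_views VALUES (?, ?)", rows)
    result = dict(conn.execute("SELECT country, COUNT(*) FROM page_views GROUP BY country"))
    conn.close()
    return result


def views_by_country_mapreduce(rows):
    """Hand-written version: the map, shuffle, and reduce steps spelled out."""
    mapped = [(country, 1) for country, _url in rows]  # map: emit (country, 1)
    groups = defaultdict(list)
    for country, one in mapped:                         # shuffle: group by key
        groups[country].append(one)
    return {country: sum(ones) for country, ones in groups.items()}  # reduce


print(views_by_country_sql(PAGE_VIEWS) == views_by_country_mapreduce(PAGE_VIEWS))  # True
```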

11. Apache Pig

Pig offers an alternative approach to making MapReduce jobs easier. It was developed at Yahoo to simplify writing Hadoop MapReduce programs. Pig lets developers use Pig Latin, a scripting language designed for the Pig framework that runs on the Pig runtime.

Pig Latin consists of SQL-like commands that the compiler converts into MapReduce programs in the background. These programs then perform large-scale data processing on YARN.

Features of Pig:

- Pig allows users to create their own functions (user-defined functions) for special-purpose processing.

- It is well suited to solving complex use cases.

- It handles data of all kinds.
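Here is a short Pig Latin data flow, shown in comments, that loads, filters, groups, and aggregates web-log records; the file name `logs.csv` and its fields are hypothetical. Each statement is paired with the plain-Python step it corresponds to, to show the kind of work Pig's compiler turns into MapReduce jobs. This illustrates the semantics only, not how Pig actually executes scripts.

```python
import csv
import io
from collections import defaultdict

RAW_LOGS = "alice,120\nbob,80\nalice,300\ncarol,150\nbob,200\n"  # hypothetical logs.csv


def bytes_per_heavy_user(raw_text, threshold=100):
    # logs = LOAD 'logs.csv' USING PigStorage(',') AS (user:chararray, bytes:int);
    logs = [(user, int(size)) for user, size in csv.reader(io.StringIO(raw_text))]

    # big = FILTER logs BY bytes > 100;
    big = [(user, size) for user, size in logs if size > threshold]

    # grp = GROUP big BY user;
    grp = defaultdict(list)
    for user, size in big:
        grp[user].append(size)

    # totals = FOREACH grp GENERATE group, SUM(big.bytes);
    return {user: sum(sizes) for user, sizes in grp.items()}


print(bytes_per_heavy_user(RAW_LOGS))  # {'alice': 420, 'carol': 150, 'bob': 200}
```

The four Pig Latin lines describe what to compute; the grouping and summing that must be distributed across a cluster is left to Pig.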

12. Presto

Presto is an open-source query engine (SQL-on-Hadoop) developed by Facebook for running interactive analytic queries against petabytes of data. It allows querying the data where it lives, including Cassandra, Hive, proprietary data stores, or relational databases.

A single Presto query can combine data from different sources and perform analytics across the entire organization. It does not depend on Hadoop MapReduce and returns results quickly, typically in anywhere from under a second to a few minutes.

Features of Presto:

- It is easy to install and debug.

- Presto has a simple and extensible architecture.

- It supports user-defined functions.
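A Presto query addresses tables through `catalog.schema.table` names, so data living in, say, Hive and MySQL can be joined in one statement. As a rough local analogy, not Presto itself, SQLite's `ATTACH DATABASE` lets a single connection query tables held in separate databases. In the sketch below, the `hive` and `mysql` database names are illustrative stand-ins for Presto catalogs, and the tables and rows are invented.

```python
import sqlite3


def federated_revenue():
    conn = sqlite3.connect(":memory:")
    # Two separately attached databases stand in for two Presto catalogs.
    conn.execute("ATTACH DATABASE ':memory:' AS hive")
    conn.execute("ATTACH DATABASE ':memory:' AS mysql")
    conn.execute("CREATE TABLE hive.orders (customer_id INTEGER, amount REAL)")
    conn.execute("CREATE TABLE mysql.customers (id INTEGER, name TEXT)")
    conn.executemany("INSERT INTO hive.orders VALUES (?, ?)", [(1, 50.0), (2, 20.0), (1, 30.0)])
    conn.executemany("INSERT INTO mysql.customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])
    # One query joins data that "lives" in two different stores.
    rows = conn.execute(
        """
        SELECT c.name, SUM(o.amount) AS revenue
        FROM hive.orders AS o
        JOIN mysql.customers AS c ON o.customer_id = c.id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()
    conn.close()
    return rows


print(federated_revenue())  # [('Acme', 80.0), ('Globex', 20.0)]
```

In Presto the sources would be genuinely different systems reached through connectors, and the engine would push parts of the work down to them where it can.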

13. Apache Flink

Apache Flink is an open-source distributed processing engine designed for stateful computations over bounded and unbounded data streams.

It is written in Java and Scala. Apache Flink can run in all common cluster environments and performs computations in-memory.

With high availability configured, it has no single point of failure.

Features of Flink:

- Flink delivers high throughput and low latency.

- It can be scaled to thousands of cores and terabytes of application state.

- Flink is used for stream processing applications such as event-driven applications, data analytics applications, and data pipeline applications.

Companies including Alibaba, Bouygues Telecom, and BetterCloud use Apache Flink.
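Flink's stateful stream processing can be pictured with a tumbling-window count per key. The Python generator below is a simplified sketch, not Flink's DataStream API: `tumbling_window_counts` is a hypothetical name, and it assumes events arrive in timestamp order, whereas real Flink handles out-of-order events with watermarks. Because it is a generator, the same code runs on a bounded list or on an unbounded, never-ending iterator, mirroring Flink's treatment of bounded and unbounded streams.

```python
from collections import defaultdict
from itertools import count, islice


def tumbling_window_counts(events, window_size):
    """Yield (window_start, key, count) each time a window closes.

    events: iterable of (timestamp, key), assumed to be in timestamp order.
    """
    state = defaultdict(int)  # keyed state for the currently open window
    open_window = None
    for ts, key in events:
        window = ts - ts % window_size
        if open_window is not None and window != open_window:
            # A newer window has started, so the previous one is complete.
            yield from ((open_window, k, c) for k, c in sorted(state.items()))
            state.clear()
        open_window = window
        state[key] += 1
    if state:  # bounded input: flush the last window at the end of the stream
        yield from ((open_window, k, c) for k, c in sorted(state.items()))


bounded = [(1, "a"), (3, "b"), (4, "a"), (11, "a"), (12, "a"), (25, "b")]
print(list(tumbling_window_counts(bounded, window_size=10)))
# [(0, 'a', 2), (0, 'b', 1), (10, 'a', 2), (20, 'b', 1)]

unbounded = ((t, "sensor-1") for t in count())  # never ends
print(list(islice(tumbling_window_counts(unbounded, window_size=5), 2)))
# [(0, 'sensor-1', 5), (5, 'sensor-1', 5)]
```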

14. Apache Sqoop

Apache Sqoop is an open-source Apache project (it was retired to the Apache Attic in 2021). It is a tool designed for transferring huge amounts of data between Apache Hadoop and structured datastores, that is, relational databases such as MySQL and Oracle.

When we want to import data into HDFS from a relational database, or export data from HDFS to a relational database, we can use Sqoop.

Features of Sqoop:

- Sqoop offers connectors for all the major relational databases.

- With Sqoop we can load all the tables of the database with a single command.

- Apache Sqoop supports incremental loads, importing only the rows that have been added or updated since the previous import.
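Sqoop's incremental append imports are driven by options such as `--incremental append`, `--check-column`, and `--last-value`: only rows whose check column exceeds the last imported value are copied, and the new maximum is remembered for the next run. The Python sketch below imitates that bookkeeping with an in-memory SQLite table as the source database. `incremental_import` and the `orders` table are hypothetical, and a plain list stands in for the files an import would write to HDFS.

```python
import sqlite3


def incremental_import(conn, table, check_column, last_value):
    """Return rows with check_column > last_value, plus the new last value.

    Table and column names are interpolated into the SQL, so they must be trusted.
    """
    cur = conn.execute(
        f"SELECT * FROM {table} WHERE {check_column} > ? ORDER BY {check_column}",
        (last_value,),
    )
    columns = [d[0] for d in cur.description]
    rows = cur.fetchall()
    new_last = rows[-1][columns.index(check_column)] if rows else last_value
    return rows, new_last


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, item TEXT)")
source.executemany("INSERT INTO orders (item) VALUES (?)", [("pen",), ("book",), ("lamp",)])

hdfs_files = []  # stand-in for the files each import run would write to HDFS
rows, last = incremental_import(source, "orders", "id", 0)
hdfs_files.append(rows)
print(len(rows), last)  # 3 3

source.executemany("INSERT INTO orders (item) VALUES (?)", [("desk",), ("mug",)])
rows, last = incremental_import(source, "orders", "id", last)
hdfs_files.append(rows)
print(rows, last)  # [(4, 'desk'), (5, 'mug')] 5
```

Only the two new rows are transferred on the second run, which is the point of an incremental load on large tables.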

15. RapidMiner

RapidMiner is one of the most widely used tools for data science. In 2017, it was ranked first in the Gartner Magic Quadrant for Data Science Platforms. It is a powerful data mining tool for building predictive models.

RapidMiner is an all-in-one tool that covers data preparation, machine learning, and deep learning.

Features of RapidMiner:

- It offers a single platform for data processing, building machine learning models, and deploying them.

- It integrates with the Hadoop framework through its built-in RapidMiner Radoop.

- RapidMiner can generate predictive models through automated modeling.

16. KNIME

KNIME (Konstanz Information Miner) is an open-source data analytics platform for data analysis and business intelligence. It is written in Java.

It allows users to visually create data flows, selectively execute analysis steps, and inspect results, models, and interactive views. KNIME is a good alternative to SAS.

Features of KNIME:

- It offers simple ETL operations.

- We can integrate KNIME with other languages and technologies.

- It offers a broad spectrum of integrated tools and advanced algorithms.

- KNIME is easy to set up.

- It is known for its stability.

17. Elasticsearch

Elasticsearch is an open-source search engine based on Apache Lucene and developed in Java. It is used for full-text search and analytics through an HTTP web interface and JSON documents.

It takes unstructured data from different sources and stores it in an index format that is highly optimized for language-based searches.

Features of Elasticsearch:

- It is reliable and very easy to scale.

- It offers high speed even when searching in very large data sets.

- Elasticsearch offers simple RESTful APIs and uses schema-free JSON documents which makes searching, indexing, and querying the data easy.

- It does not require a schema up front: dynamic mapping infers field types from the JSON documents it receives.
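Elasticsearch's fast full-text search rests on Lucene's inverted index, which maps each term to the documents that contain it. The sketch below is a toy version in Python: `InvertedIndex` is hypothetical, its analyzer just lowercases and splits on word characters, and queries are plain boolean AND, whereas Elasticsearch applies configurable analyzers and ranks hits by relevance (BM25 by default).

```python
import re
from collections import defaultdict


def tokenize(text):
    """A very small analyzer: lowercase and split on non-word characters."""
    return re.findall(r"\w+", text.lower())


class InvertedIndex:
    def __init__(self):
        self.postings = defaultdict(set)  # term -> ids of documents containing it
        self.docs = {}

    def index(self, doc_id, doc):
        """Index every string field of a JSON-like document; fields may vary per doc."""
        self.docs[doc_id] = doc
        for value in doc.values():
            if isinstance(value, str):
                for term in tokenize(value):
                    self.postings[term].add(doc_id)

    def search(self, query):
        """Return ids of documents containing every query term (boolean AND, no scoring)."""
        terms = tokenize(query)
        if not terms:
            return []
        return sorted(set.intersection(*(self.postings.get(t, set()) for t in terms)))


idx = InvertedIndex()
idx.index(1, {"title": "Spark streaming guide", "body": "Process streams in memory"})
idx.index(2, {"title": "Kafka basics", "body": "Streams of events stored in topics"})
idx.index(3, {"title": "Hadoop HDFS", "replicas": 3})  # different fields, no schema needed
print(idx.search("streams"))         # [1, 2]
print(idx.search("streams memory"))  # [1]
```

A lookup touches only the posting sets for the query terms, so its cost depends on how many documents match rather than on the total size of the collection.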

Summary

So in this article, we have seen many big data technologies like Apache Hadoop, Apache Spark, MongoDB, Cassandra, and many more.

We also covered data visualization tools such as QlikView, Qlik Sense, and Tableau, as well as other big data technologies like Apache Hive, Pig, Storm, Flink, and many more.

I hope this article has made you aware of the topmost big data technologies and the reasons we use them. Now it is your turn to choose the ones that fit your requirements.